Schema Mount

The YANG Schema Mount feature is defined in RFC 8528.

Schema Mount Overview

This section introduces the YumaPro server implementation of the Schema Mount feature.

YANG Schema Mount is a new server standard defined in RFC 8528. It allows a server to support multiple instances of the same YANG model.

A YANG module is designed to define API features for a single server:

RPC operations

Notification Events

Top-Level Schema Nodes

YANG Schema Mount allows the definitions from one module to be rooted somewhere in the schema tree of another module, instead of the top-level.

This allows multiple instances of the mounted YANG module to be supported in the server, which is very useful for supporting the mount point as a logical server

The top-level is the traditional server containing all top-level and all mounted YANG models

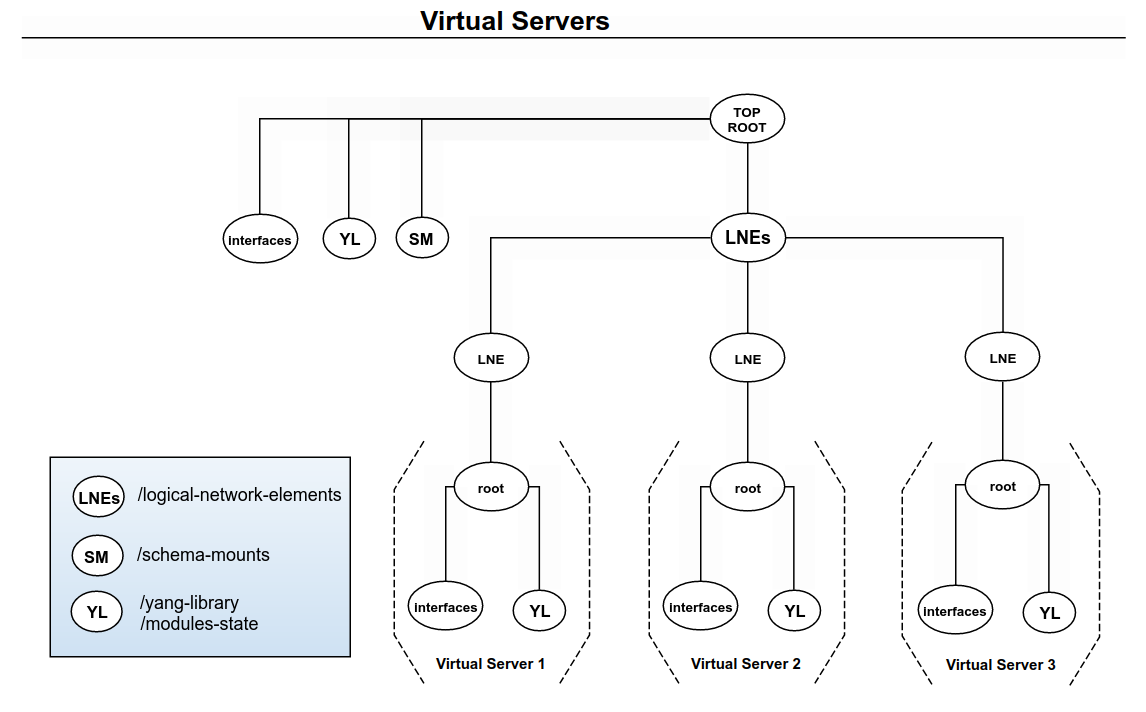

It is possible to treat each mount-point as a separate Virtual Server, although this is not part of the YANG Schema Mount standard at all

The definitions within the mounted YANG module do not change:

The “functional” semantics of the YANG definitions stay the same within the mount-point

The “per-instance” semantics for the mounted modules are defined by the top-level YANG module containing the mount point

The YANG Library (RFC 8525) is used to identify the YANG modules that are used within a mount point

This module is used within the main server and all mount points

There are 3 usage scenarios for any given YANG module:

Appears in top-level schema tree only

Appears in mounted-schema tree only

Appears in both top-level and mounted schema trees
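The three scenarios can be expressed as a small classification over two sets of module names. The following is a minimal sketch only: the function name and the sample module sets are hypothetical, and it does not represent how the server tracks module locations internally.

```python
def classify_module_usage(module, top_level_modules, mounted_modules):
    """Return which of the three usage scenarios applies to a module name."""
    in_top = module in top_level_modules
    in_mounted = module in mounted_modules
    if in_top and in_mounted:
        return "both"
    if in_top:
        return "top-level only"
    if in_mounted:
        return "mounted only"
    return "not used"


# Hypothetical module sets, loosely based on the LNE example in this section
top_level = {"ietf-interfaces", "ietf-logical-network-element"}
mounted = {"ietf-interfaces", "sm-example2"}

for name in sorted(top_level | mounted):
    print(name, "->", classify_module_usage(name, top_level, mounted))
```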

Schema Mount Example

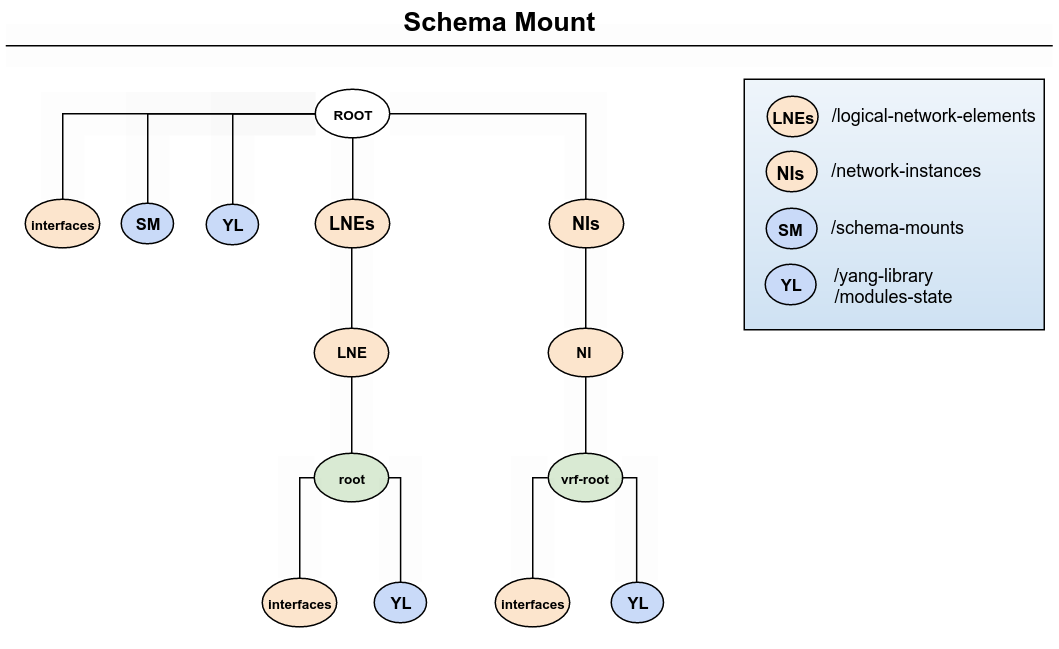

RFC 8528 contains a simple example of a schema mount (corrected diagram shown)

+--rw interfaces
|  +--rw interface* [name]
|     ...
+--rw logical-network-element* [name]
   +--rw name
   |  ...
   +--mp root
      +--rw interfaces
         +--rw interface* [name]
            ...

The “ietf-interfaces” module is being used in the top-level server and also as a mounted module, within each logical-network-element (LNE). The LNE definition in RFC 8530 has a container named “root”:

This is treated as the root “/” in the mounted schema as well as the root of a Virtual Server for the mount point

Note

No changes to the mounted module are made (or needed) at all.

Complete Reuse is an important feature of Schema Mount.

Schema Mount Terminology

The following terms are used in this document:

Host Server (HS): indicates the “real” netconfd-pro server which has visibility to all top-level schema and all mounted schema

Mount Point (MP): a container or a list node whose definition contains the "mount-point" extension statement.

The argument of the "mount-point" extension statement defines a label for the mount point.

Only one label can be assigned to a mount point node.

A mount point refers to the schema tree, not any specific data node instance of the mount point object.

Mount Point Identifier: a unique tuple representing a mount point for configuration purposes. It consists of 2 strings:

module name of the module containing the real container or list data node that will be used as a mount point. If a mount-point is defined in a grouping, then the module with the final "uses" statement defines the real data node.

label of the Mount Point

Mount Point Instance (MPI): a data node instance of a mount point. All mounted data nodes have this node as their parent. Every mount point instance is represented by the following information:

object node path identifier

set of list key leafs for any ancestor list entries. Note that any mount point list keys are part of this set.

If a mount-point is a YANG list object, then it will appear to be a container to any mounted objects and data nodes.

Mount Point Instance Descriptor (MPID) is a data structure representing a specific MPI. Refer to the ncx_sm_mpid_t Access section in the developer manual for details.

Mounted Schema: a schema rooted at a mount point

Parent Schema: a top-level schema which contains the mount point for one or more mounted schema, within the server

Schema: a collection of YANG schema trees with a common root

Schema Mount (SM): the mechanism to combine data models defined in RFC 8528

Schema Mount Aware: SIL or SIL-SA callback functions that are aware the module is mounted within a mount point and not really a top-level module. There are server APIs to access the MPID information so schema-mount aware callbacks can determine the ancestor keys and mount point object.

Top-level Schema: a schema rooted at the root node and visible in the Host Server only

Location: the server schema tree is divided into separate locations.

All of the top-level modules comprise one location

Each implemented mount point is a separate location

There are configuration and implementation restrictions regarding the use of a YANG module in multiple locations.

The YANG Library in each location is independent

The modules, bundles, revisions, features, deviations, annotations, and other parameters can be configured for each location.

Mount Point

The ietf-yang-schema-mount.yang module defines the 'mount-point' extension.

extension mount-point {
  argument label;
  description
    "The argument 'label' is a YANG identifier, i.e., it is of the
     type 'yang:yang-identifier'.

     The 'mount-point' statement MUST NOT be used in a YANG
     version 1 module, neither explicitly nor via a 'uses'
     statement.

     The 'mount-point' statement MAY be present as a substatement
     of 'container' and 'list' and MUST NOT be present elsewhere.
     There MUST NOT be more than one 'mount-point' statement in a
     given 'container' or 'list' statement.

     If a mount point is defined within a grouping, its label is
     bound to the module where the grouping is used.

     A mount point defines a place in the node hierarchy where
     other data models may be attached.  A server that implements a
     module with a mount point populates the
     '/schema-mounts/mount-point' list with detailed information on
     which data models are mounted at each mount point.

     Note that the 'mount-point' statement does not define a new
     data node.";
}

A mount point is defined by using this extension as a sub-statement directly within a container or list statement for a data node.

Note

The server only supports mount points that are defined within configuration data nodes.

Virtual Toaster Example

The following tutorial module shows how a mount point is defined:

module vtoaster {
  yang-version 1.1;
  namespace "urn:yumaworks:params:xml:ns:vtoaster";
  prefix vtoast;

  import ietf-yang-schema-mount {
    prefix yangmnt;
  }

  revision 2023-02-20 {
    description "Initial version.";
  }

  container vtoasters {
    list vtoaster {
      yangmnt:mount-point "toaster";
      key id;

      leaf id {
        type uint8 {
          range "1 .. max";
        }
        description
          "Identifier for each virtual toaster.";
      }
    }
  }
}

In this example:

The mount point identifier is { 'vtoaster', 'toaster' }

The mount point root object is the node /vtoasters/vtoaster

A mount point instance (MPI) must include an ancestor key leaf for node /vtoasters/vtoaster/id.

If the instance desired is 'id=2' then the instance-identifier for the MPID would be /vtoasters/vtoaster[id=2]

If there is a mounted top-level data node named 'topnode' then an instance of this object for the MPID might be /vtoasters/vtoaster[id=2]/topnode
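The relationship between the object node path, the ancestor list keys, and the resulting instance-identifier can be sketched in a few lines. This is an illustration only, using the simplified path notation from this example (no module prefixes, unquoted key values); the function name and argument layout are hypothetical and are not part of the server API.

```python
def mpi_instance_identifier(path_steps, keys):
    """Build an instance-identifier string for a mount point instance.

    path_steps: node names from the root down to the mount point object
    keys: maps a list node name to an ordered list of (key-leaf, value) pairs
    """
    parts = []
    for step in path_steps:
        # Append one [key=value] predicate for each key leaf of a list node
        predicates = "".join(
            "[{}={}]".format(name, value) for name, value in keys.get(step, [])
        )
        parts.append(step + predicates)
    return "/" + "/".join(parts)


mpi = mpi_instance_identifier(["vtoasters", "vtoaster"], {"vtoaster": [("id", 2)]})
print(mpi)               # /vtoasters/vtoaster[id=2]
print(mpi + "/topnode")  # /vtoasters/vtoaster[id=2]/topnode
```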

Server Usage Procedure

There are some basic steps needed for a server to utilize Schema Mount:

A server needs to know which YANG modules should be mounted at a given mount-point

A mount-point is identified by a label name

There is no standard to configure this information so a proprietary YANG module and XML or JSON configuration file are needed. See the sm-config Structure section for details.

After the top-level YANG modules are loaded into the server, the Schema Mount configuration is examined and the mount-points are “filled in” with the configured modules

The server will generate a YANG Library instance for each mount point

Each mount point instance has a YANG library in the subtree /modules-state

The server will generate the Schema Mount Monitoring module contents (ietf-yang-schema-mount.yang)

This is visible in the top-level schema tree only

Client Usage Procedure

There are some basic steps needed for a client to utilize Schema Mount:

The client must check if the ietf-yang-schema-mount is supported in the top-level YANG Library

If so, the client must read the /schema-mounts container to determine the schema mount points on this server

For each mount point the client must read the data available at the mount point

The YANG library instance in the mount point will list the YANG modules that should be mounted

The client must maintain internal data structures such that the mounted modules appear as child nodes of the mount point (container or list)
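The client steps above can be sketched against already-retrieved data. The example below assumes the YANG Library and /schema-mounts contents have been fetched and decoded into Python dictionaries using JSON-style member names; the sample data and the function names are hypothetical, and the retrieval itself (NETCONF or RESTCONF) is not shown.

```python
SM_MODULE = "ietf-yang-schema-mount"


def module_names(yang_library):
    """List module names from a /modules-state style YANG Library instance."""
    state = yang_library.get("ietf-yang-library:modules-state", {})
    return [entry["name"] for entry in state.get("module", [])]


def discover_mount_points(top_level_library, schema_mounts):
    """Return (module, label) for each mount point, or [] if SM is unsupported."""
    if SM_MODULE not in module_names(top_level_library):
        return []
    mounts = schema_mounts.get("ietf-yang-schema-mount:schema-mounts", {})
    return [(mp["module"], mp["label"]) for mp in mounts.get("mount-point", [])]


# Hypothetical data as it might be read from the top-level server
top_library = {"ietf-yang-library:modules-state": {"module": [
    {"name": "ietf-yang-schema-mount"},
    {"name": "ietf-logical-network-element"},
]}}
schema_mounts = {"ietf-yang-schema-mount:schema-mounts": {"mount-point": [
    {"module": "ietf-logical-network-element", "label": "root", "shared-schema": {}},
]}}
# Hypothetical YANG Library instance read from inside one mount point
mounted_library = {"ietf-yang-library:modules-state": {"module": [
    {"name": "ietf-interfaces"},
]}}

for mount_point in discover_mount_points(top_library, schema_mounts):
    print(mount_point, "mounts", module_names(mounted_library))
```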

Subtle Schema Mount Features

There are some aspects of Schema Mount that are either undefined or poorly defined, and some behavior that is not obvious:

If the mount point is within a “config false” node, or it is itself a “config false” node then all mounted YANG modules are treated as “config false”, overriding the mounted YANG module definitions

An RPC operation within a mount-point can be accessed as an “action” instead of an “RPC”

A notification within a mount point can be generated as if the notification-stmt was located within the mount point (container or list), instead of the top-level. YANG 1.1 defines mechanisms to encode such notifications within the specified data nodes

Each mount point instance defines a separate tree for YANG validation

The path statements in the mounted YANG module are treated as if the expression root “/” is really the mount-point

YANG validation of mounted schema has to be done in the mount point, even if the write access is from the top-level server

Metadata defined in YANG modules (e.g., extension, identity) is only accessible within the mount point in which it is defined

A parent module is required to use YANG 1.1. It is an error to use the “mount-point” extension within a YANG 1.0 module

A given container or list can only have 1 mount-point extension-stmt. It is an error if more than one such statement is present in the same container or list

For “shared schema”, different instances of the mount point MUST have the same schema mounted
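The “config false” rule at the top of this list amounts to a logical AND between the mount point and the mounted definition. A minimal sketch, with a hypothetical function name:

```python
def effective_config(mount_point_is_config, node_is_config):
    """A mounted node is writable only if the mount point and the node both are."""
    return mount_point_is_config and node_is_config


# A "config true" leaf mounted under a "config false" mount point is read-only
print(effective_config(False, True))
```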

Core Functionality

The core SM functionality includes all supported RFC 8528 features described in the following section:

Edit and Retrieval operations for mounted Data Nodes from the HS

RPC operations on mounted schema from the HS

Notification delivery originating from mounted schema, sent from the HS

The Server Architecture will provide some new features:

Mounted schema can be supported from SIL or SIL-SA

Currently, not supported in PY-SIL

NACM Access Control will be updated to support mounted schema, as required in the RFC

RFC 8528 Features

Each section of the RFC contains specific requirements that are not listed, but referenced here:

Sec. 3.1: The mount-point extension will be supported

Sec. 3.3: Duplicate mount-points will be supported

Same label in different nodes causes the same modules at each mount-point

Multiple mount labels in the same mount point are combined

The Virtual Server is coupled to the specific node, not any particular mount label

Sec. 4: Nested mount points will not be supported

Mount points in mounted modules will cause a warning and be ignored

It is not clear yet if it will be possible (or useful) to mount the parent module in itself

Sec. 5: notifications sent from mounted-modules can be configured to be sent in the top-level server, as defined in this section

Sec. 6: NMDA YANG Library will be used if --with-nmda is used

The old /modules-state tree is present even if NMDA is enabled

If --with-nmda is ‘false’ then only the old /modules-state subtree will be present

Sec. 7: NACM will support paths into the mounted data as defined in this section

Sec. 8: the split management scheme will be used

session to root server will see all data

session to a mount-point will see only that mount-point

Sec. 9: entire YANG module will be implemented

Unsupported Features

The Schema Mount RFC defines some mechanisms that will not be implemented unless sufficient customer demand exists to do so:

“parent-reference”: This mechanism allows XPath expressions in YANG statements within mounted modules to be evaluated in the parent XPath context instead of the mount-point context

This feature is very complex and unless actually needed is too much effort to implement and educate customers to use.

“inline” schema: The “shared-schema” mechanism defined in the RFC will be used. The “inline” mechanism will not be used. The “shared” mechanism uses the YANG Library to let the client discover the mounted modules. This method is more complete and already supported within NETCONF clients.

Schema Mount Data Node Example

It is possible to fully access data instances of mounted schema nodes by specifying the full path from the top-level root (in the Host Server). The XPath expression “rules” for YANG are relaxed to treat mounted schema nodes as child nodes of the parent container or list.

An edit or retrieval operation on mounted data is handled normally, as if the child nodes were properly defined in a YANG module.

Note

All top-level data nodes from all mounted modules appear as child nodes within the mount point container or list.

Within the mount point the mounted data appears to be top-level data

/ietf-interfaces:interfaces

Within the Host Server there are multiple distinct instances of ‘interfaces’, each in a different subtree.
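The mapping between a path as seen inside a mount point and the same node as seen from the Host Server is a simple prefix operation. The sketch below uses the mount point instance path form that appears elsewhere in this section; the function name is hypothetical.

```python
def host_server_path(mpi_path, mounted_path):
    """Prefix a path that is absolute within a mount point with the MPI path."""
    if not mounted_path.startswith("/"):
        raise ValueError("mounted path must be absolute within the mount point")
    return mpi_path.rstrip("/") + mounted_path


lne_root = "/logical-network-elements/logical-network-element=1/root"
print(host_server_path(lne_root, "/ietf-interfaces:interfaces"))
```

Each mount point instance yields a different prefix, which is why the Host Server sees several distinct 'interfaces' subtrees.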

Schema Mount RPC Operation Example

In this example the RPC “ex2-rpc” is defined in a mounted module.

The RPC is not available as an RPC in the Host Server

The RPC is converted to a YANG Action in order to use it within a Host Server

The RPC is available in each Virtual Server

module: sm-example2

  rpcs:
    +---x ex2-rpc
       +---w input
       |  +---w start-msg?   string
       +--ro output
          +--ro end-msg?   string

In this example the RPC is converted to action /logical-network-elements/logical-network-element=1/root/ex2-rpc

Note

All top-level RPC operations from all mounted modules appear as nested operations within the mount point container or list.

RPC request converted to a YANG Action (used within a HS):

<rpc message-id="2" xmlns="urn:ietf:params:xml:ns:netconf:base:1.0">
  <action xmlns="urn:ietf:params:xml:ns:yang:1">
    <logical-network-elements xmlns="urn:ietf:params:xml:ns:yang:ietf-logical-network-element">
      <logical-network-element>
        <name>1</name>
        <root>
          <ex2-rpc xmlns="http://netconfcentral.org/ns/sm-example2">
            <start-msg>test1</start-msg>
          </ex2-rpc>
        </root>
      </logical-network-element>
    </logical-network-elements>
  </action>
</rpc>
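A client could assemble this kind of request programmatically. The following sketch uses only the Python standard library and the namespaces shown in the request above; the function name is hypothetical. ElementTree serializes namespaces with generated prefixes (ns0, ns1, and so on) rather than default namespace declarations, so the output is equivalent XML rather than a character-for-character match.

```python
import xml.etree.ElementTree as ET

NETCONF_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"
YANG_NS = "urn:ietf:params:xml:ns:yang:1"
LNE_NS = "urn:ietf:params:xml:ns:yang:ietf-logical-network-element"
EX2_NS = "http://netconfcentral.org/ns/sm-example2"


def build_mounted_rpc_action(message_id, lne_name, start_msg):
    """Wrap the mounted 'ex2-rpc' operation in a YANG action request."""
    rpc = ET.Element("{%s}rpc" % NETCONF_NS, {"message-id": str(message_id)})
    action = ET.SubElement(rpc, "{%s}action" % YANG_NS)
    # Path from the Host Server root down to the mount point instance
    lnes = ET.SubElement(action, "{%s}logical-network-elements" % LNE_NS)
    lne = ET.SubElement(lnes, "{%s}logical-network-element" % LNE_NS)
    ET.SubElement(lne, "{%s}name" % LNE_NS).text = lne_name
    root = ET.SubElement(lne, "{%s}root" % LNE_NS)
    # The mounted RPC appears as a nested operation inside the mount point
    operation = ET.SubElement(root, "{%s}ex2-rpc" % EX2_NS)
    ET.SubElement(operation, "{%s}start-msg" % EX2_NS).text = start_msg
    return rpc


request = build_mounted_rpc_action(2, "1", "test1")
print(ET.tostring(request, encoding="unicode"))
```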

Schema Mount Notification Example

RFC 7950 defines a nested notification-stmt that allows data-specific notification events to be generated.

The message encoding is different for this type of notification. Notifications for mounted schema are treated as if they are nested notifications, when being sent from the HS.

Note

All top-level notifications from all mounted modules appear as nested notifications within the mount point container or list.

Notification generated within the HS for a mounted schema (encoded within data nodes):

<?xml version="1.0" encoding="UTF-8"?>
<notification xmlns="urn:ietf:params:xml:ns:netconf:notification:1.0">
  <eventTime>2022-01-06T00:13:22Z</eventTime>
  <logical-network-elements xmlns="urn:ietf:params:xml:ns:yang:ietf-logical-network-element">
    <logical-network-element>
      <name>1</name>
      <root>
        <netconf-session-start
          xmlns="urn:ietf:params:xml:ns:yang:ietf-netconf-notifications">
          <username>andy</username>
          <session-id>4</session-id>
          <source-host>127.0.0.1</source-host>
        </netconf-session-start>
      </root>
    </logical-network-element>
  </logical-network-elements>
</notification>
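A Schema Mount aware client that knows the path to the mount point can walk down the notification to find the event. The sketch below assumes a container mount point (as with “root” here), so the first child of the mount point node is taken to be the event; the function names are hypothetical and the XML declaration is omitted from the sample for brevity.

```python
import xml.etree.ElementTree as ET

SAMPLE = """<notification xmlns="urn:ietf:params:xml:ns:netconf:notification:1.0">
  <eventTime>2022-01-06T00:13:22Z</eventTime>
  <logical-network-elements
      xmlns="urn:ietf:params:xml:ns:yang:ietf-logical-network-element">
    <logical-network-element>
      <name>1</name>
      <root>
        <netconf-session-start
            xmlns="urn:ietf:params:xml:ns:yang:ietf-netconf-notifications">
          <username>andy</username>
          <session-id>4</session-id>
          <source-host>127.0.0.1</source-host>
        </netconf-session-start>
      </root>
    </logical-network-element>
  </logical-network-elements>
</notification>"""


def local_name(tag):
    """Strip the '{namespace}' part from an ElementTree tag."""
    return tag.rsplit("}", 1)[-1]


def mounted_event(notification_xml, mount_point_steps):
    """Return (event-name, leaf-values) found under the given mount point path."""
    node = ET.fromstring(notification_xml)
    for step in mount_point_steps:
        node = next((c for c in node if local_name(c.tag) == step), None)
        if node is None:
            return None
    if len(node) == 0:
        return None
    event = node[0]  # assumption: container mount point, first child is the event
    return local_name(event.tag), {local_name(c.tag): c.text for c in event}


steps = ["logical-network-elements", "logical-network-element", "root"]
print(mounted_event(SAMPLE, steps))
```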

Backward Compatibility

The management system is expected to contain a mixture of client applications. Some will be “Schema Mount Aware” and others will not be aware of these “extra” schema nodes.

Clients that are not aware of the SM schema nodes will still encounter data nodes for these YANG objects.

The client will treat this data as “unknown children”.

A non-SM-aware client can encounter these “unknown objects” in 2 ways:

Retrieval operations such as <get>, <get-config> or <get-data>

Notifications for mounted modules may contain unknown event types and unknown data nodes.

There is nothing that can or will be done about clients finding data nodes with no associated schema node.

This is part of the Schema Mount Standard: clients are expected to handle this situation.

(yangcli-pro will print a warning about the unknown node, and will use anyxml for the unknown nodes.)

NON Schema Mount Aware

A client which is not aware of SM or any mounted objects will only find the objects from LNE and NI and not the objects in the “ietf-interfaces” module

The client will not expect to find the “interfaces” and YL data nodes as child nodes of “/logical-network-elements/logical-network-element/root”

Schema Mount Aware

A client which is aware of SM will retrieve the top-level YL and SM data and construct the schema trees based on the contents of the mounted modules

Mount Point

A client which uses a Virtual Server to a mount point will see only the YL and mounted modules

This usually appears to be a top-level server to any client

If this is a “config=false” mount point then all mounted data will be read only. A client must be SM-aware to know why the “config true” statements are being ignored in the mounted modules

Schema Mount CLI Parameters

Two new CLI parameters will be created to support the Schema Mount implementation.

--with-schema-mount Specifies whether the Schema Mount feature will be used.

The default will be ‘true’. This will cause the YANG modules and SIL code to be loaded.

Additional configuration will be required to actually cause any schema mount-points found in YANG modules to be used in any way.

The WITH_SCHEMA_MOUNT=1 make flag must be used for this feature to be available in the client or server

--sm-config Specifies the XML or JSON file to read which contains the configuration data needed to create the desired mount-points.

This is a mandatory parameter to use Schema Mount in the server if the --with-schema-mount parameter is set to 'true'.

This config file controls what modules and bundles are loaded into the YANG Library for each mount-point label.

If --with-schema-mount=true

If this parameter is set then the file must be found and be valid or the server will exit with an error. Otherwise the Schema Mount feature will not be enabled.

If --with-schema-mount=false

This parameter is ignored
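The interaction between the two parameters can be summarized as a small decision function. This is a sketch of the behavior described above, not the server's startup code: the function name and return strings are hypothetical, and only a JSON file is checked here even though the server also accepts XML.

```python
import json


def schema_mount_startup(with_schema_mount, sm_config_path):
    """Return 'enabled', 'disabled', or 'error' for the given CLI settings."""
    if not with_schema_mount:
        return "disabled"   # --sm-config is ignored
    if sm_config_path is None:
        return "disabled"   # no configuration, so no mount points are used
    try:
        with open(sm_config_path) as config_file:
            json.load(config_file)
    except (OSError, ValueError):
        return "error"      # file missing or not valid: server exits with an error
    return "enabled"


print(schema_mount_startup(True, None))
print(schema_mount_startup(False, "/opt/yumapro/etc/vs-example1.json"))
```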

Schema Mount YANG Modules

The following YANG modules are used in the Schema Mount feature:

sm-config Structure

This YANG structure is used by a server developer to configure the YANG modules that will be present in the server. A JSON or XML file containing this structure is read by the server at boot-time. Refer to the --sm-config CLI parameter for more details.

Example sm-config JSON File

This example file could be used for the “sm-example” module in the previous section.

The server would have the following parameters in this example:

--module=sm-example

--sm-config=/opt/yumapro/etc/vs-example1.json

{
  "yumaworks-schema-mount:schema-mount" : {
    "sm-config" : [
      {
        "mp-module" : "sm-example",
        "mp-label" : "example1",
        "mp-config" : true,
        "mp-cli" : {
          "module" : [ "sm-example2" ]
        }
      }
    ]
  }
}
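To check such a file before starting the server, a developer might list which modules each mount point identifier will receive. The sketch below reads only the members that appear in the example above; the function name is hypothetical, and the real structure may contain members that this code does not handle.

```python
import json

SM_CONFIG_TEXT = """
{
  "yumaworks-schema-mount:schema-mount" : {
    "sm-config" : [
      {
        "mp-module" : "sm-example",
        "mp-label" : "example1",
        "mp-config" : true,
        "mp-cli" : { "module" : [ "sm-example2" ] }
      }
    ]
  }
}
"""


def mount_point_modules(sm_config_text):
    """Map each (mp-module, mp-label) identifier to its list of mounted modules."""
    document = json.loads(sm_config_text)
    entries = document["yumaworks-schema-mount:schema-mount"]["sm-config"]
    return {
        (entry["mp-module"], entry["mp-label"]):
            entry.get("mp-cli", {}).get("module", [])
        for entry in entries
    }


for identifier, modules in mount_point_modules(SM_CONFIG_TEXT).items():
    print(identifier, "->", modules)
```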

BBF Network Manager Example

This example shows how the bbf-obbaa-network-manager can be used to schema mount the bbf-obbaa-pma-device-config module using the external interface specified in the previous section.

The BBF YANG module for network-manager, bbf-obbaa-network-manager has a container named “root”:

This is treated as the root “/” in the mounted schema as well as the root of a Virtual Server for the mount point.

The following modules are being used in the top-level server and also as a mounted module, within each Device list entry:

module ietf-interfaces

module ietf-ipfix-psamp

module bbf-obbaa-device-adapters

module bbf-obbaa-pma-device-config

module bbf-obbaa-module-library-check

BBF YANG Modules

module: bbf-obbaa-pma-device-config
  +--rw pma-device-config
     +---x align
     |  +---w input
     |     +---w force?   identityref
     +--ro alignment-state?   enumeration

module: bbf-obbaa-device-adapters
  +--rw deploy-adapter
  |  +---x deploy
  |     +---w input
  |        +---w adapter-archive?   string
  +--rw undeploy-adapter
     +---x undeploy
        +---w input
           +---w adapter-archive?   string

module: ietf-interfaces
  +--rw interfaces
  |  +--rw interface* [name]
  |     +--rw name    string
  ...

module: bbf-obbaa-network-manager
  +--rw network-manager
     +--rw managed-devices
        +--rw device* [name]
           +--rw name                 string
           +--rw device-management
           |  +--rw type?                string
           |  +--rw interface-version?   string
           |  +--ro is-netconf?          boolean
           |  ...
           +--rw root
              +--rw pma-device-config
              |  +---x align
              |  |  +---w input
              |  |     +---w force?   identityref
              |  +--ro alignment-state?   enumeration
              +--rw deploy-adapter
              |  +---x deploy
              |     +---w input
              |        +---w adapter-archive?   string
              +--rw undeploy-adapter
              |  +---x undeploy
              |     +---w input
              |        +---w adapter-archive?   string
              +--rw interfaces
              |  +--rw interface* [name]
              |     +--rw name    string
              ...

SM-Config File Example

This example file could be used for the schema mount configuration.

The server would have the following parameters in this example:

--module=bbf-obbaa-network-manager

--sm-config=/opt/yumapro/etc/sm-obbaa.json

Example sm-obbaa.json:

{
  "yumaworks-schema-mount:schema-mount" : {
    "sm-config" : [
      {
        "mp-module" : "bbf-obbaa-network-manager",
        "mp-label" : "root",
        "mp-config" : true,
        "mp-cli" : {
          "module" : [
            "ietf-interfaces",
            "iana-if-type",
            "ietf-ipfix-psamp@2012-09-05",
            "bbf-obbaa-device-adapters@2018-08-31",
            "bbf-obbaa-pma-device-config@2018-06-15",
            "bbf-obbaa-module-library-check@2018-11-07"
          ],
          "feature" : [
            "ietf-ipfix-psamp:exporter",
            "ietf-ipfix-psamp:meter"
          ],
          "feature-enable-default" : "false"
        }
      }
    ]
  }
}