Multi-threading Support (PTHREADS)

In the YumaPro SDK PTHREADS builds, POSIX multi-threading is supported with each session running in its own thread.

This page describes the functional specification for POSIX Threads support for the YumaPro netconfd-pro program.

PTHREADS Build Overview

The netconfd-pro server supports both single-threaded and multi-threaded processing.

In a single-threaded build, netconfd-pro executes all work in a single main control flow with a single entry point (the netconfd_run() loop in netconfd.c) and a single exit point.

A multi-threaded program has the same initial entry point, followed by many entry and exit points that run concurrently. The term "concurrency" refers to doing multiple tasks at the same time.

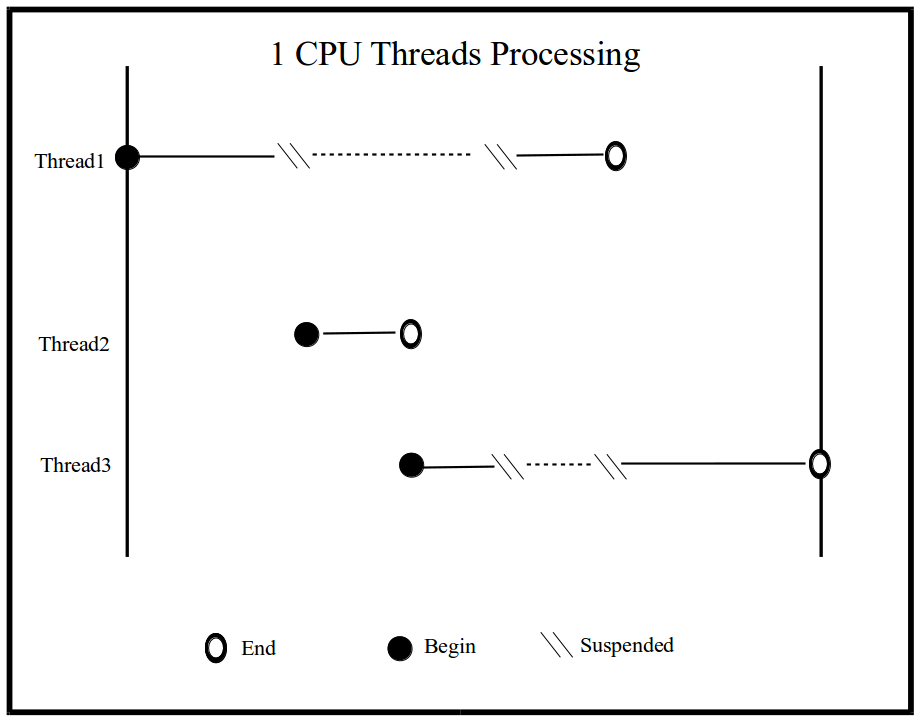

Single CPU Threads Processing

The netconfd-pro server has built-in support for concurrent programming: it runs multiple threads concurrently within a single program.

A thread (sometimes called a lightweight process) is a single sequential flow of operations within a process, with a definite beginning and an end. A thread does not run on its own; it runs within a program.

The following figure shows a program with 3 threads running under a single CPU:

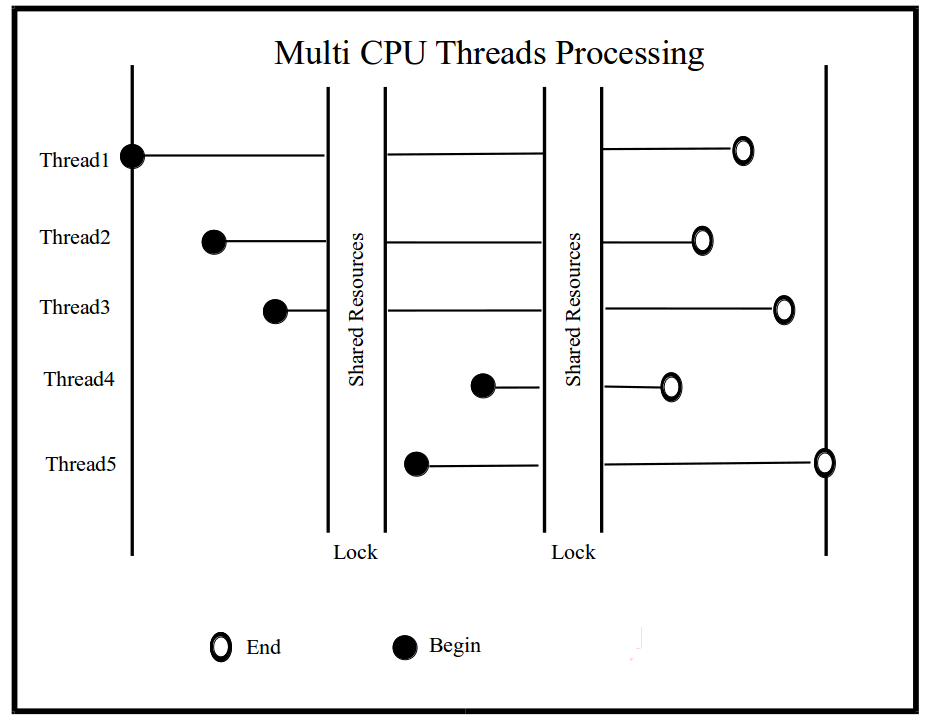

Multi-CPU Threads Processing

On a single-CPU machine, only one task executes at any instant. On a multi-CPU machine, several tasks can execute simultaneously, either distributed across the CPUs or time-sliced on them.

The netconfd-pro server provides multitasking and multi-threading support for performance by making full use of computing resources. The server uses a co-operative multitasking system: each thread must voluntarily yield control to another thread. Shared resources are accessed by applying a lock; after the task is performed, the thread unlocks the resource so another thread can access it.

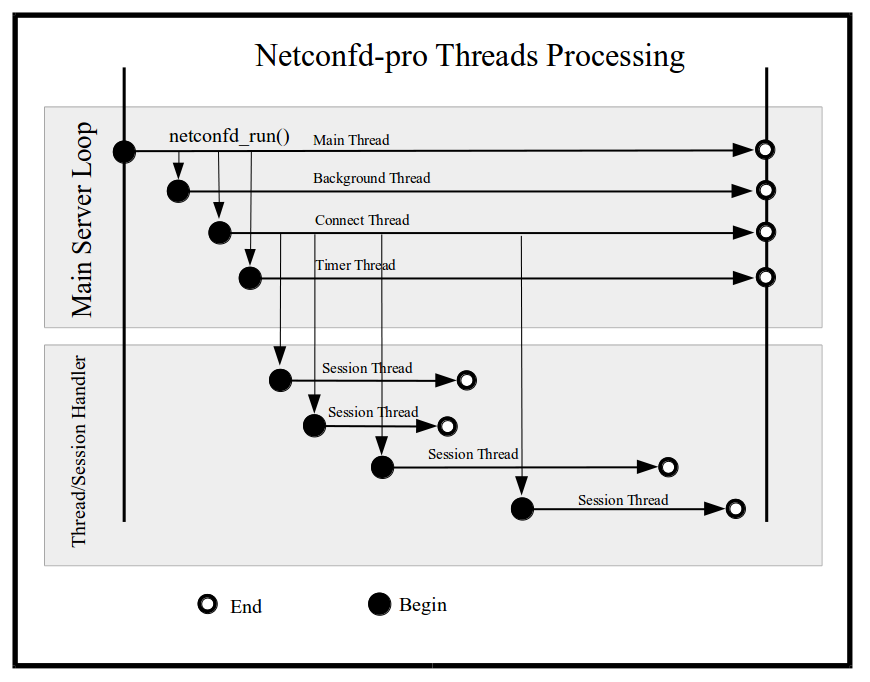

Thread Model in netconfd-pro

In PTHREADS builds, netconfd-pro uses separate threads for different tasks. By default, the server allocates 4 threads (main, background, timer, and connect), and it creates one session thread per client session.

The server allocates the following threads:

Main thread: primarily checks for shutdown events and handles signals.

Background thread: continuously checks whether a Confirmed Commit has been cancelled.

Timer thread: a single thread whose primary responsibility is to call the timer service routine once a second.

Connect thread: listens on the socket for session requests. For each accepted request it creates a file descriptor for the new session and starts a new client session thread.

Session threads: one receive thread (session handler) per client session. Each checks for I/O, an explicit signal, or a timeout.
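The connect thread and session thread roles listed above can be sketched with plain POSIX calls. This is only an illustration of the thread-per-session pattern, not the netconfd-pro source: the names demo_session_t, demo_session_thread, and demo_connect_loop are hypothetical, and the socket accept step is replaced by a simple loop so the sketch stays self-contained.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define DEMO_MAX_SESSIONS 8   /* hypothetical limit for this sketch */

/* Hypothetical per-session state; the real server keeps far more. */
typedef struct {
    int session_id;
    int handled;
} demo_session_t;

/* Session thread entry point. A real session thread would loop,
 * checking for I/O, an explicit signal, or a timeout. */
static void *demo_session_thread(void *arg)
{
    demo_session_t *ses = arg;
    assert(ses != NULL);
    ses->handled = 1;
    return NULL;
}

/* Stand-in for the connect thread: each "accepted" request gets its
 * own session thread. Returns the number of sessions that ran. */
int demo_connect_loop(int count)
{
    pthread_t tids[DEMO_MAX_SESSIONS];
    demo_session_t sessions[DEMO_MAX_SESSIONS];
    int started = 0;
    int handled = 0;

    if (count > DEMO_MAX_SESSIONS) {
        count = DEMO_MAX_SESSIONS;
    }

    for (int i = 0; i < count; i++) {
        sessions[i].session_id = i + 1;
        sessions[i].handled = 0;
        if (pthread_create(&tids[i], NULL, demo_session_thread,
                           &sessions[i]) != 0) {
            break;   /* stop accepting if a thread cannot be created */
        }
        started++;
    }

    /* Wait for every session thread that was started. */
    for (int i = 0; i < started; i++) {
        pthread_join(tids[i], NULL);
        handled += sessions[i].handled;
    }
    return handled;
}
```

Calling demo_connect_loop(3) from a test program starts three session threads and returns once all three have finished. Build with the compiler's thread option (for example -pthread with gcc or clang).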

The following diagram illustrates the main and default netconfd-pro threads processing mechanism.

Synchronization and Critical Sections

The server initializes multiple mutex locks and provides basic critical section primitives.

General-purpose critical section macros such as ENTER_CS and EXIT_CS are used for extended computational sections. These macros do not employ a spin lock and may be called recursively by the owning thread. The source code contains an example usage in heapchk.c.

In PTHREADS builds, the general-purpose critical section lock is implemented as a recursive mutex (PTHREAD_MUTEX_RECURSIVE). This allows the owning thread to enter the same critical section multiple times and requires the same number of exits to release the lock.

Lock Template (General-Purpose Critical Section)

The general-purpose critical section lock is implemented with the following properties:

Type: PTHREAD_MUTEX_RECURSIVE

File: thd.h

Macros: ENTER_CS / EXIT_CS (lock/unlock)

Mutex attribute template: thd_recursive_cs_mutex_attr

PTHREAD_MUTEX_RECURSIVE behavior:

A thread attempting to relock this mutex without first unlocking succeeds.

The relocking deadlock that can occur with PTHREAD_MUTEX_NORMAL cannot occur with this type of mutex.

Multiple locks of this mutex require the same number of unlocks to release the mutex before another thread can acquire it.

An attempt by a thread to unlock a mutex that is locked by another thread returns an error.

An attempt by a thread to unlock a mutex that is not locked returns an error.
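The recursive-mutex behavior listed above can be tried out with a small sketch. The names demo_cs_mutex, DEMO_ENTER_CS, and DEMO_EXIT_CS are hypothetical stand-ins chosen for this example; the actual ENTER_CS / EXIT_CS macros and the thd_recursive_cs_mutex_attr template are defined in the SDK and may differ in detail.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <pthread.h>

/* Hypothetical stand-ins for the SDK lock and macros. */
static pthread_mutex_t demo_cs_mutex;

#define DEMO_ENTER_CS() pthread_mutex_lock(&demo_cs_mutex)
#define DEMO_EXIT_CS()  pthread_mutex_unlock(&demo_cs_mutex)

/* Initialize the lock with the recursive mutex type.
 * Returns 0 on success or a pthread error number. */
int demo_cs_init(void)
{
    pthread_mutexattr_t attr;
    int rc = pthread_mutexattr_init(&attr);
    if (rc != 0) {
        return rc;
    }
    rc = pthread_mutexattr_settype(&attr, PTHREAD_MUTEX_RECURSIVE);
    if (rc == 0) {
        rc = pthread_mutex_init(&demo_cs_mutex, &attr);
    }
    pthread_mutexattr_destroy(&attr);
    return rc;
}

/* Enter the critical section twice, exit twice, then attempt one
 * extra exit. Returns the result of the extra exit. */
int demo_cs_nested(void)
{
    int rc;

    rc = DEMO_ENTER_CS();
    assert(rc == 0);
    rc = DEMO_ENTER_CS();      /* relock by the owner succeeds */
    assert(rc == 0);

    rc = DEMO_EXIT_CS();       /* still held: one exit remaining */
    assert(rc == 0);
    rc = DEMO_EXIT_CS();       /* matching exit releases the lock */
    assert(rc == 0);
    (void)rc;

    /* The mutex is no longer owned, so this unlock reports an error
     * (EPERM per POSIX for a recursive mutex). */
    return DEMO_EXIT_CS();
}
```

After demo_cs_init() succeeds, demo_cs_nested() shows that two entries need two exits and that a third, unmatched exit is rejected rather than silently accepted.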

Concurrency vs. Datastore Updates

The use of multiple threads is in some ways an ideal solution to the problem of asynchronous I/O and asynchronous event handling in general, since events can be handled asynchronously and needed synchronization can be done explicitly (using mutexes).

Not every operation can run concurrently. Most operations can run asynchronously; whether a given operation can do so depends primarily on whether it affects the datastore.

For example, if multiple client sessions are in progress and all attempt to write to the datastore, the server serializes the requests and applies them one after another to avoid datastore corruption.

Many operations can be processed concurrently across sessions. Only an internal critical section locking is applied for these cases, such as:

Since the operations above do not rely on the current datastore state, they can be done concurrently from multiple sessions.

Requests that affect and modify the datastore cannot be run at the same time. To avoid datastore corruption, the server serializes these requests and applies them one after another, including:

any operation that modifies the datastore

If edit-config is in progress, GET requests and other operations that rely on datastore state are held until the edit completes.
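One common way to obtain the behavior described above (edits applied one at a time, reads held while an edit is in progress, reads otherwise concurrent) is a POSIX reader-writer lock. The sketch below assumes that approach purely for illustration; this page does not state which mechanism netconfd-pro uses internally. The names demo_ds_lock, demo_get, demo_edit, and demo_run_writers are hypothetical, and an integer stands in for the datastore.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define DEMO_WRITERS_MAX      8      /* hypothetical limits */
#define DEMO_EDITS_PER_WRITER 1000

static pthread_rwlock_t demo_ds_lock = PTHREAD_RWLOCK_INITIALIZER;
static int demo_ds_value;            /* stand-in for the datastore */

/* Read path (like a GET): many readers may hold the lock at once,
 * but a reader waits while a writer holds it. */
int demo_get(void)
{
    int rc = pthread_rwlock_rdlock(&demo_ds_lock);
    assert(rc == 0);
    (void)rc;
    int value = demo_ds_value;
    pthread_rwlock_unlock(&demo_ds_lock);
    return value;
}

/* Write path (like an edit): exclusive access, so edits are applied
 * one after another. */
void demo_edit(int delta)
{
    int rc = pthread_rwlock_wrlock(&demo_ds_lock);
    assert(rc == 0);
    (void)rc;
    demo_ds_value += delta;
    pthread_rwlock_unlock(&demo_ds_lock);
}

static void *demo_writer(void *arg)
{
    (void)arg;
    for (int i = 0; i < DEMO_EDITS_PER_WRITER; i++) {
        demo_edit(1);
    }
    return NULL;
}

/* Start several writer threads, wait for them, and return the final
 * datastore value. */
int demo_run_writers(int nthreads)
{
    pthread_t tids[DEMO_WRITERS_MAX];
    int started = 0;

    if (nthreads > DEMO_WRITERS_MAX) {
        nthreads = DEMO_WRITERS_MAX;
    }

    /* Reset the stand-in datastore under the write lock. */
    pthread_rwlock_wrlock(&demo_ds_lock);
    demo_ds_value = 0;
    pthread_rwlock_unlock(&demo_ds_lock);

    for (int i = 0; i < nthreads; i++) {
        if (pthread_create(&tids[i], NULL, demo_writer, NULL) != 0) {
            break;
        }
        started++;
    }
    for (int i = 0; i < started; i++) {
        pthread_join(tids[i], NULL);
    }
    return demo_get();
}
```

Because every edit holds the write lock, no increment is lost: with 4 writer threads the final value is 4 times DEMO_EDITS_PER_WRITER. Without the lock, concurrent updates to demo_ds_value would be a data race.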