Software Overview

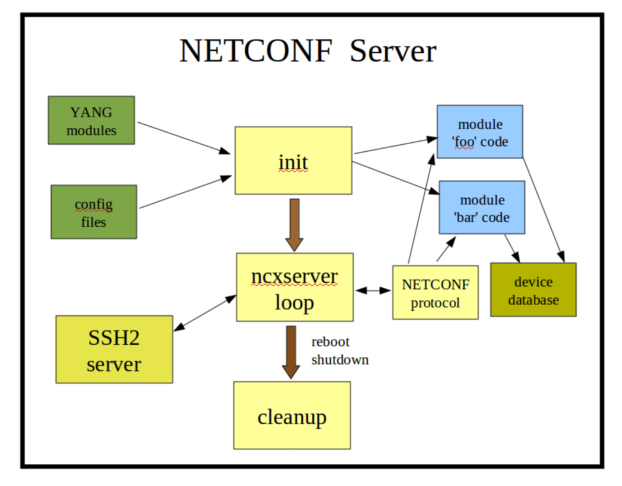

Server Design

This section describes the basic design used in the netconfd-pro server.

Initialization:

The netconfd-pro server launches the ypwatcher monitoring program, processes the YANG modules, CLI parameters, config file parameters, and the startup device NETCONF database, and then waits for NETCONF sessions.

ncxserver Loop:

The SSH2 server will listen for incoming connections which request the 'netconf' subsystem.

When a new SSH session request is received, the netconf-subsystem-pro program is called, which opens a local connection to the netconfd-pro server, via the ncxserver loop.

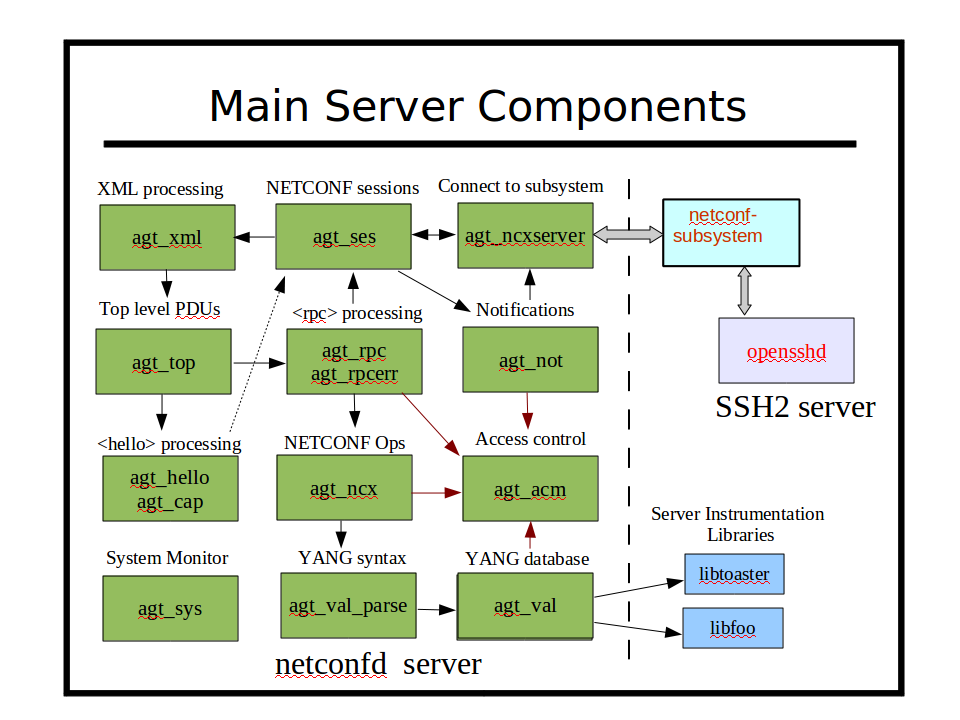

NETCONF <rpc> requests are processed by the internal NETCONF stack. The module-specific callback functions (blue boxes) can be loaded into the system at build-time or run-time. This is the device instrumentation code, also called a server implementation library (SIL). For example, for 'libtoaster', this is the code that controls the toaster hardware.

Cleanup:

If the <shutdown> or <reboot> operations are invoked, then the server will clean up. For a reboot, the init cycle is started again, instead of exiting the program.

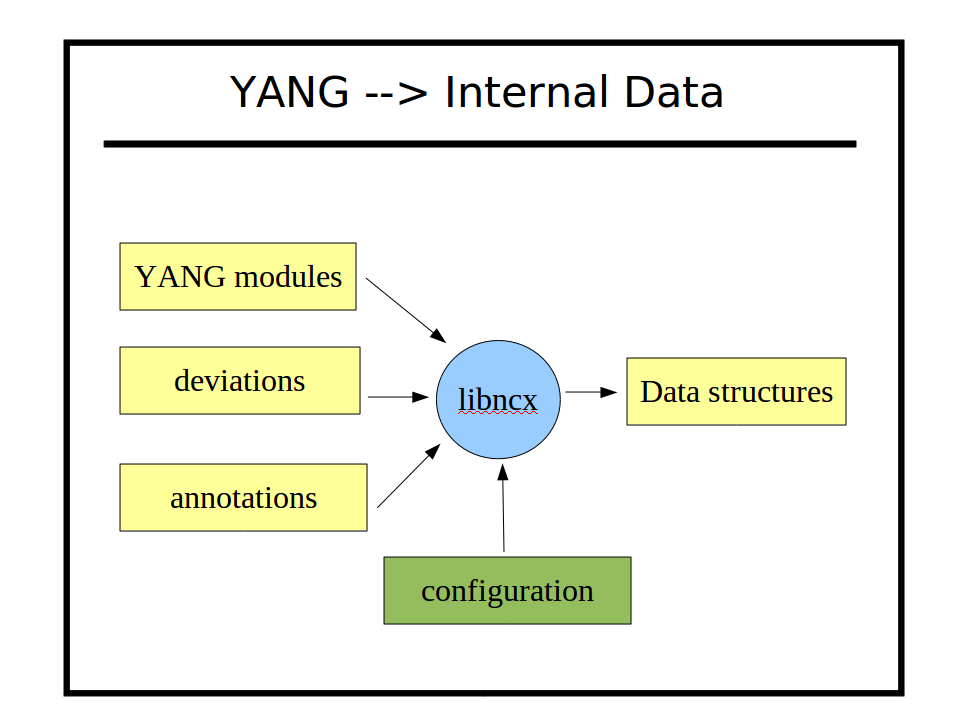

YANG Native Operation

YumaPro uses YANG source modules directly to implement NETCONF protocol operations automatically within the server. The same YANG parser is used by all YumaPro programs. It is located in the 'ncx' source directory (libncx.so). There are several different parsing modes, which are set by the application.

In 'server mode', the descriptive statements, such as 'description' and 'reference', are discarded upon input. Only the machine-readable statements are saved. All possible database validation, filtering, processing, initialization, NV-storage, and error processing is done based on these machine-readable statements.

For example, in order to set the platform-specific default value for some leaf, instead of hard-coding it into the server instrumentation, the default is stored in YANG data. The YANG file can be altered, either directly (by editing) or indirectly (via deviation statements), and the new or altered default value specified there.

In addition, range statements, patterns, XPath expressions, and all other machine-readable statements are all processed automatically, so the YANG statements themselves are like server source code.

YANG also allows vendor and platform-specific deviations to be specified, which are like generic patches to the common YANG module for whatever purpose is needed. YANG also allows annotations to be defined and added to YANG modules, which are specified with the 'extension' statement. YumaPro uses some extensions to control some automation features, but any module can define extensions, and module instrumentation code can access these annotations during server operation to control device behavior.

There are CLI parameters that can be used to control parser behavior such as warning suppression, and protocol behavior related to the YANG content, such as XML order enforcement and NETCONF protocol operation support. These parameters are stored in the server profile, which can be customized for each platform.

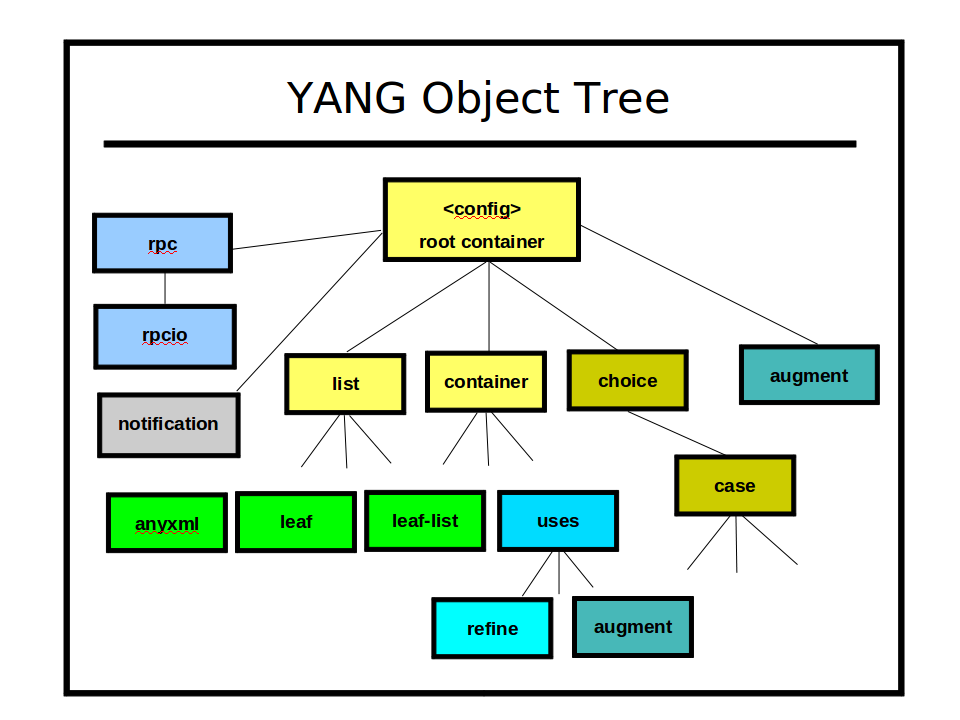

YANG Object Tree

The YANG statements found in a module are converted to internal data structures.

For NETCONF and database operations, a single tree of obj_template_t data structures is maintained by the server. This tree represents all the NETCONF data that is supported by the server. It does not represent any actual data structure instances. It just defines the data instances that are allowed to exist on the server.

Raw YANG vs. Cooked YANG:

Some of the nodes in this tree represent the exact YANG statements that the data modeler has used, such as 'augment', 'refine', and 'uses', but these nodes are not used directly in the object tree. They exist in the object tree, but they are processed to produce a final set of YANG data statements, translated into 'cooked' nodes in the object tree. If any deviation statements are used by server implementation of a YANG data node (to change it to match the actual platform implementation of the data node), then these are also 'patched' into the cooked YANG nodes in the object tree.

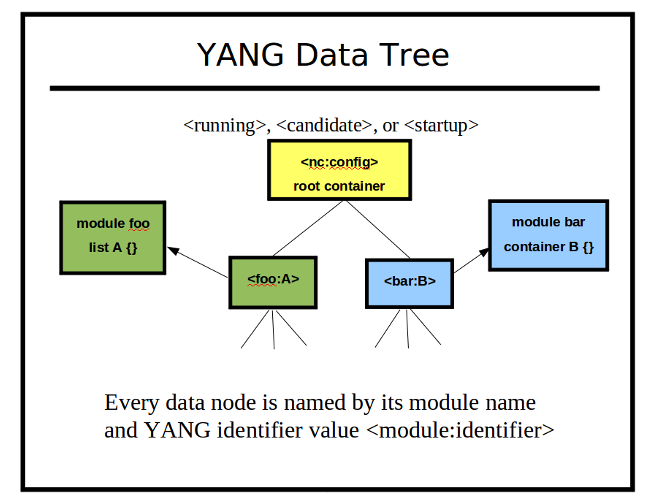

YANG Data Tree

A YANG data tree represents the instances of 1 or more of the objects in the object tree.

Each NETCONF database is a separate data tree. A data tree is constructed for each incoming message as well. The server has automated functions to process the data tree, based on the desired NETCONF operation and the object tree node corresponding to each data node.

Every NETCONF node (including database nodes) is distinguished by an XML Qualified Name (QName). The YANG module namespace is used as the XML namespace, and the YANG identifier is used as the XML local name.

Each data node contains a pointer back to its object tree schema node. The value tree is comprised of the val_value_t structure. Only real data is actually stored in the value tree. For example, there are no data tree nodes for choices and cases. These are conceptual layers, not real layers, within the data tree.

The NETCONF server engine accesses individual SIL callback functions through the data tree and object tree. Each data node contains a pointer to its corresponding object node.

Each data node may have several different callback functions stored in the object tree node. Usually, the actual configuration value is stored in the database. However, virtual data nodes are also supported. These are simply placeholder nodes within the data tree, and are usually used for non-configuration nodes, such as counters. Instead of using a static value stored in the data node, a callback function is used to retrieve the instrumentation value each time it is accessed.
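The relationship between a data node, its object node, and a virtual-node callback can be sketched as follows. This is a minimal illustration only: the 'demo_' types, the field names, and the counter callback are hypothetical stand-ins and do not reflect the actual layout of obj_template_t or val_value_t.

```c
#include <stddef.h>

/* Hypothetical, simplified stand-ins for the object and value structs. */
typedef struct demo_obj demo_obj_t;
typedef struct demo_val demo_val_t;

/* Callback used by a virtual node to fetch its value on demand. */
typedef long (*demo_get_fn)(const demo_val_t *val);

struct demo_obj {
    const char *ns;      /* YANG module namespace (XML namespace) */
    const char *name;    /* YANG identifier (XML local name) */
    demo_get_fn get_cb;  /* NULL for ordinary stored values */
};

struct demo_val {
    const demo_obj_t *obj;  /* back pointer to the schema node */
    long stored;            /* static value, used when get_cb is NULL */
};

/* Return the stored value for a real node, or call the callback
 * registered on the object node for a virtual node. */
long demo_val_get(const demo_val_t *val)
{
    if (val->obj->get_cb != NULL) {
        return val->obj->get_cb(val);
    }
    return val->stored;
}

/* Example instrumentation: a counter that advances on every read. */
static long demo_counter = 0;

long demo_read_counter(const demo_val_t *val)
{
    (void)val;
    return ++demo_counter;
}
```

A configuration leaf would leave 'get_cb' as NULL and return its stored value, while a counter would point 'get_cb' at instrumentation code such as 'demo_read_counter', so each retrieval sees a fresh value.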

Session Control Block

Once a NETCONF session is started, it is assigned a session control block for the life of the session. All NETCONF and system activity is driven through this interface, so the 'ncxserver' loop can be replaced in an embedded system.

Each session control block (ses_scb_t) controls the input and output for one session, which is associated with one SSH user name. Access control (see ietf-netconf-acm.yang) is enforced within the context of a session control block. Unauthorized return data is automatically removed from the response. Unauthorized <rpc> or database write requests are automatically rejected with an 'access-denied' error-tag.

The user preferences for each session are also stored in this data structure. They are initially derived from the server default values, but can be altered with the <set-my-session> operation and retrieved with the <get-my-session> operation.

Server Message Flows

The netconfd-pro server provides the following types of components:

NETCONF session management

NETCONF/YANG database management

NETCONF/YANG protocol operations

Access control configuration and enforcement

RPC error reporting

Notification subscription management

Default data retrieval processing

Database editing

Database validation

Subtree and XPath retrieval filtering

Dynamic and static capability management

Conditional object management (if-feature, when)

Memory management

Logging management

Timer services

All NETCONF and YANG protocol operation details are handled automatically within the netconfd-pro server. All database locking and editing is also handled by the server. There are callback functions available at different points of the processing model for your module-specific instrumentation code to process each server request and/or generate notifications. Everything except the 'description statement' semantics is usually handled by the server.

The server instrumentation stub files associated with the data model semantics are generated automatically with the yangdump-pro program. The developer fills in server callback functions to activate the networking device behavior represented by each YANG data model.

Main ncxserver Loop

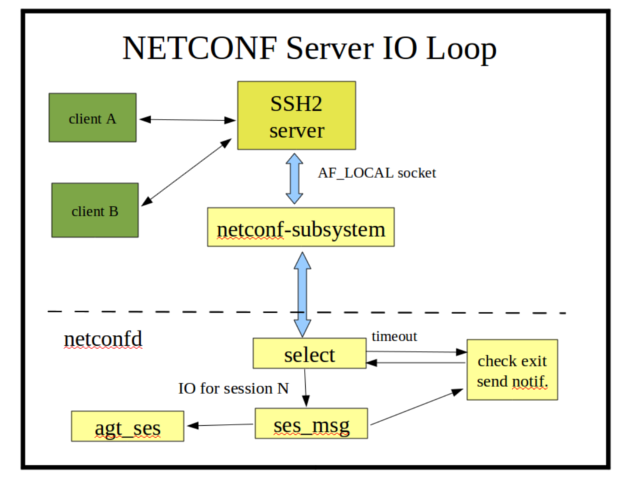

The agt_ncxserver loop does very little, and it is designed to be replaced in an embedded server that has its own SSH server:

A client request to start an SSH session results in an SSH channel being established to an instance of the netconf-subsystem-pro program.

The netconf-subsystem-pro program will open a local socket /tmp/ncxserver.sock and send a proprietary <ncx-connect> message to the netconfd-pro server, which is listening on this local socket with a select loop (in agt/agt_ncxserver.c).

When a valid <ncx-connect> message is received by netconfd-pro, a new NETCONF session is created.

After sending the <ncx-connect> message, the netconf-subsystem-pro program goes into 'transfer mode', and simply passes input from the SSH channel to the netconfd-pro server, and passes output from the netconfd-pro server to the SSH server.

The agt_ncxserver loop simply waits for input on the open connections, with a quick timeout. Each timeout, the server checks if a reboot, shutdown, signal, or other event occurred that needs attention.

Notifications may also be sent during the timeout check, if any events are queued for processing. The --max-burst configuration parameter controls the number of notifications sent to each notification subscription, during this timeout check.

Input <rpc> messages are buffered, and when a complete message is received (based on the NETCONF End-of-Message marker), it is processed by the server and any instrumentation module callback functions that are affected by the request.
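The buffering step can be illustrated with a small sketch that scans an input buffer for the NETCONF End-of-Message marker, which is the character sequence "]]>]]>". The function name and return convention are hypothetical and are not taken from the server source. Chunked framing, as used by later NETCONF versions, is not covered here.

```c
#include <stddef.h>
#include <string.h>

#define DEMO_EOM     "]]>]]>"   /* NETCONF End-of-Message marker */
#define DEMO_EOM_LEN 6

/*
 * Scan buf[0..len) for the End-of-Message marker.
 * Returns the length of the first complete message (marker excluded),
 * or -1 if no complete message has been buffered yet.
 */
long demo_find_message_end(const char *buf, size_t len)
{
    size_t i;

    if (len < DEMO_EOM_LEN) {
        return -1;
    }
    for (i = 0; i + DEMO_EOM_LEN <= len; i++) {
        if (memcmp(buf + i, DEMO_EOM, DEMO_EOM_LEN) == 0) {
            return (long)i;
        }
    }
    return -1;
}
```

A caller would keep appending received bytes to the buffer and only hand a message to the processing code once this function returns a non-negative length.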

When the agt_ncxserver_run function in agt/agt_ncxserver.c is replaced within an embedded system, the replacement code must handle the following tasks:

Call 'agt_ses_new_session' in agt/agt_ses.c when a new NETCONF session starts.

Call 'ses_accept_input' in ncx/ses.c with the correct session control block when NETCONF data is received.

Call 'agt_ses_process_first_ready' in agt/agt_ses.c after input is received. This should be called repeatedly until all serialized NETCONF messages have been processed.

Call 'agt_ses_kill_session' in agt/agt_ses.c when the NETCONF session is terminated.

The 'ses_msg_send_buffs' function in ncx/ses_msg.c is used to output any queued send buffers.

The following functions need to be called periodically:

'agt_shutdown_requested' in agt/agt_util.c to check if the server should terminate or reboot.

'agt_ses_check_timeouts' in agt/agt_ses.c to check for idle sessions or sessions stuck waiting for a NETCONF <hello> message.

'agt_timer_handler' in agt/agt_timer.c to process server and SIL periodic callback functions.

'send_some_notifications' in agt/agt_ncxserver.c to process some outgoing notifications.
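The overall shape of a replacement loop can be sketched as below. This is only an outline under stated assumptions: the hook table, its member signatures, and the 'demo_' names are hypothetical, and each hook merely stands in for the role of the server function named in its comment. The real function signatures are not shown here and must be taken from the server headers. Session creation and termination are omitted for brevity.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical hook table; each member stands in for one server call. */
typedef struct demo_loop_hooks {
    bool (*input_ready)(void *ctx);         /* transport has NETCONF data */
    void (*accept_input)(void *ctx);        /* role of ses_accept_input */
    bool (*process_first_ready)(void *ctx); /* role of agt_ses_process_first_ready */
    bool (*shutdown_requested)(void *ctx);  /* role of agt_shutdown_requested */
    void (*check_timeouts)(void *ctx);      /* role of agt_ses_check_timeouts */
    void (*timer_handler)(void *ctx);       /* role of agt_timer_handler */
    void (*send_notifications)(void *ctx);  /* role of send_some_notifications */
} demo_loop_hooks_t;

/* Run until shutdown is requested; returns the number of passes made. */
unsigned demo_run_loop(const demo_loop_hooks_t *h, void *ctx)
{
    unsigned passes = 0;

    while (!h->shutdown_requested(ctx)) {
        if (h->input_ready(ctx)) {
            h->accept_input(ctx);
            /* Repeat until every queued message has been processed. */
            while (h->process_first_ready(ctx)) {
            }
        }
        /* Periodic work done on every pass. */
        h->check_timeouts(ctx);
        h->timer_handler(ctx);
        h->send_notifications(ctx);
        passes++;
    }
    return passes;
}

/* Example context and hooks used only to exercise the loop. */
typedef struct demo_ctx {
    int pending_input;   /* passes that still have input to read */
    int queued_msgs;     /* messages waiting to be processed */
    int processed;       /* messages processed so far */
    int periodic_calls;  /* periodic hook invocations */
} demo_ctx_t;

bool demo_input_ready(void *c) { return ((demo_ctx_t *)c)->pending_input > 0; }

void demo_accept(void *c)
{
    demo_ctx_t *x = c;
    x->pending_input--;
    x->queued_msgs += 2;   /* pretend each read delivers two messages */
}

bool demo_process(void *c)
{
    demo_ctx_t *x = c;
    if (x->queued_msgs == 0) {
        return false;
    }
    x->queued_msgs--;
    x->processed++;
    return true;
}

bool demo_shutdown(void *c)
{
    demo_ctx_t *x = c;
    return x->pending_input == 0 && x->queued_msgs == 0;
}

void demo_periodic(void *c) { ((demo_ctx_t *)c)->periodic_calls++; }

const demo_loop_hooks_t demo_hooks = {
    demo_input_ready, demo_accept, demo_process, demo_shutdown,
    demo_periodic, demo_periodic, demo_periodic
};
```

In a real embedded system the hooks would wrap the platform transport and the server functions listed above, and the loop would typically block with a short timeout instead of spinning.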

SIL Callback Functions

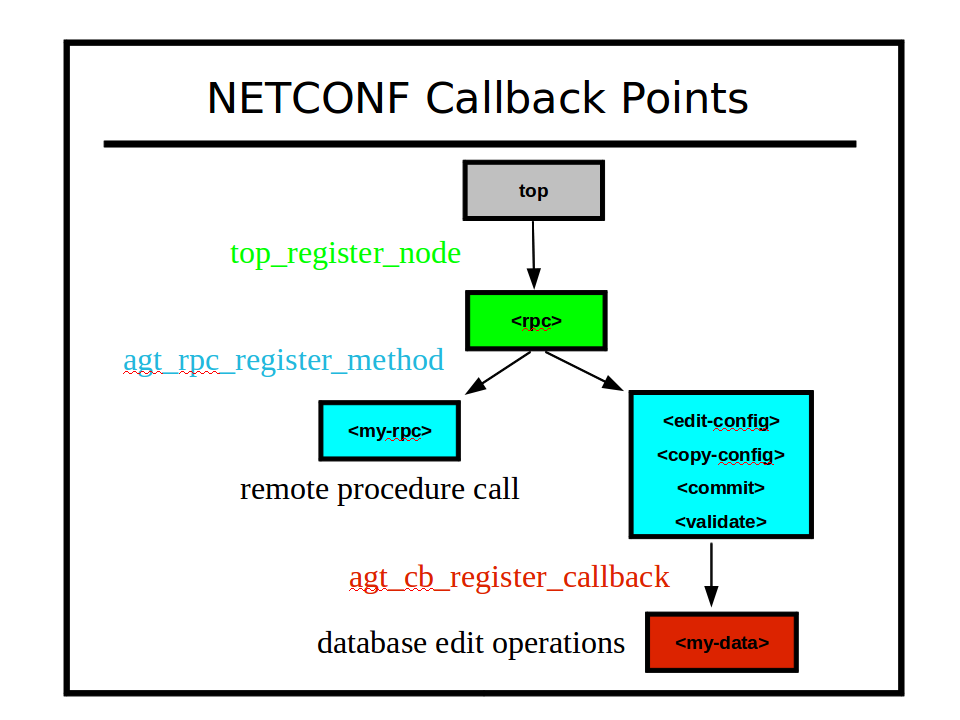

Top Level: The top-level incoming messages are registered, not hard-wired, in the server message processing design. The agt_ncxserver module accepts the <ncx-connect> message from netconf-subsystem-pro. The 'agt_rpc' module accepts the NETCONF <rpc> message. Additional messages can be supported by the server using the 'top_register_node' function.

All RPC operations are implemented in a data-driven fashion by the server. Each NETCONF operation is handled by a separate function in agt/agt_ncx.c. Any proprietary operation can be automatically supported, using the 'agt_rpc_register_method' function.

Note: Once the YANG module is loaded into the server, all RPC operations defined in the module are available. If no SIL code is found, these will be dummy 'no-op' functions.

All database operations are performed in a structured manner, using special database access callback functions. Not all database nodes need callback functions. One callback function can be used for each 'phase', or the same function can be used for multiple phases. The 'agt_cb_register_callback' function in agt/agt_cb.c is used by SIL code to hook into NETCONF database operations.

SIL Callback Interface

This section briefly describes the SIL code that a developer will need to create to handle the data-model specific details. SIL functions access internal server data structures, either directly or through utility functions. Database mechanics and XML processing are done by the server engine, not the SIL code. A more complete reference can be found in section 5.

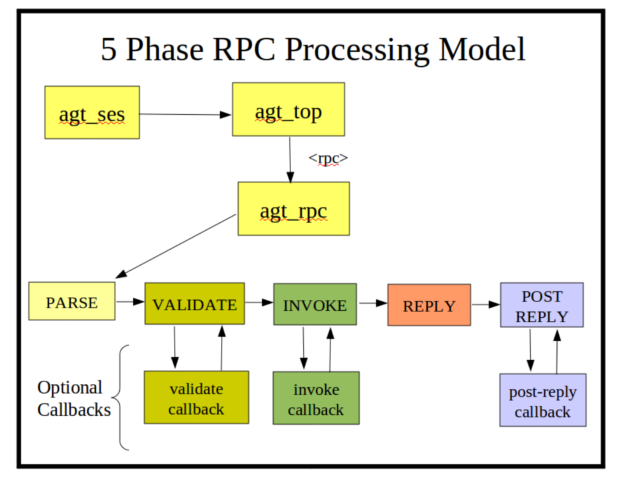

When a <rpc> request is received, the NETCONF server engine will perform the following tasks before calling any SIL:

parse the RPC operation element, and find its associated YANG rpc template

if found, check if the session is allowed to invoke this RPC operation

if the RPC is allowed, parse the rest of the XML message, using the 'rpc_template_t' for the RPC operation to determine if the basic structure is valid.

if the basic structure is valid, construct an rpc_msg_t data structure for the incoming message.

check all YANG machine-readable constraints, such as must, when, if-feature, min-elements, etc.

if the incoming message is completely 'YANG valid', then the server will check for an RPC validate function, and call it if found. This SIL code is only needed if there are additional system constraints to check. For example:

need to check if a configuration name such as <candidate> is supported

need to check if a configuration database is locked by another session

need to check description statement constraints not covered by machine-readable constraints

need to check if a specific capability or feature is enabled

If the validate function returns a NO_ERR status value, then the server will call the SIL invoke callback, if it is present. This SIL code should always be present, otherwise the RPC operation will have no real effect on the system.

At this point, an <rpc-reply> is generated, based on the data in the rpc_msg_t.

Errors are recorded in a queue when they are detected.

The server will handle the error reply generation for all errors it detects.

For SIL-detected errors, the 'agt_record_error' function in agt/agt_util.h is usually used to save the error details.

Reply data can be generated by the SIL invoke callback function and stored in the rpc_msg_t structure.

Reply data can be streamed by the SIL code via reply callback functions. For example, the <get> and <get-config> operations use callback functions to deal with filters, and stream the reply by walking the target data tree.

After the <rpc-reply> is sent, the server will check for an RPC post reply callback function. This is only needed if the SIL code allocated some per-message data structures. For example, the rpc_msg_t contains 2 SIL controlled pointers ('rpc_user1' and 'rpc_user2'). The post reply callback is used by the SIL code to free these pointers, if needed.

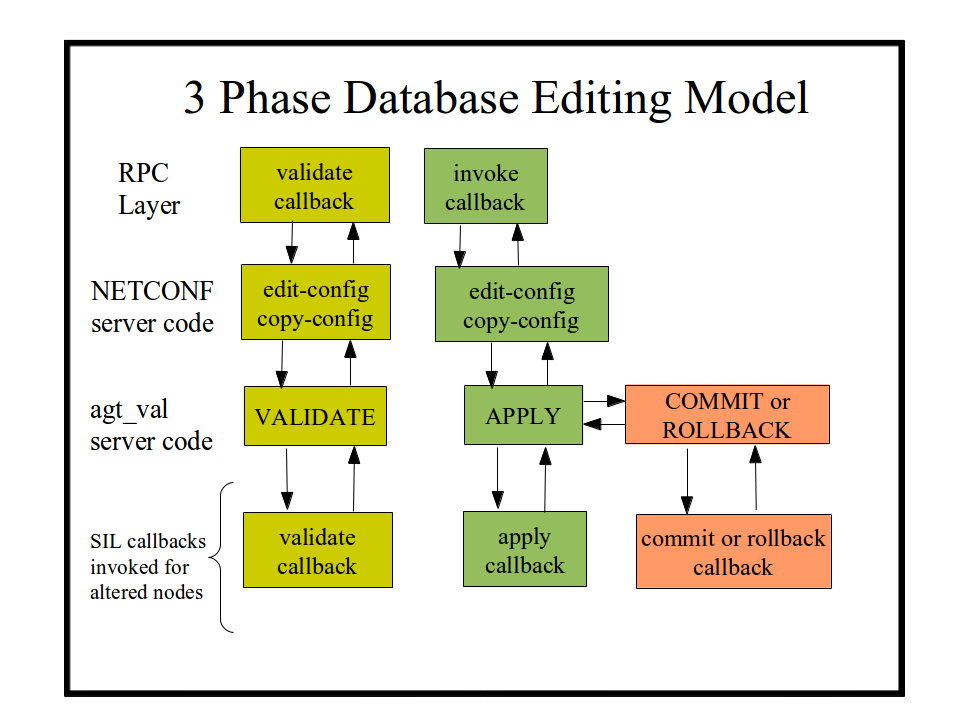

The database edit SIL callbacks are only used for database operations that alter the database. The validate and invoke callback functions for these operations will in turn invoke the data-model specific SIL callback functions, depending on the success or failure of the edit request.

Server Initialization

This section describes the server initialization sequence in some detail. There are several phases in the initialization process, and various CLI parameters are defined to control the initialization behavior.

The file netconfd-pro/netconfd-pro.c contains the initial 'main' function that is used to start the server.

The platform-specific initialization code should be located in /usr/lib/yumapro/libyp_system.so. An example can be found in libsystem/src/example-system.c

The YANG module or bundle specific SIL or SIL-SA code is also initialized at startup if there are CLI parameters set to load them at boot-time.

Set the Server Profile

The agt_profile_t Struct defined in agt/agt.h is used to hold the server profile parameters.

The function 'init_server_profile' in agt/agt.c is called to set the factory defaults for the server behavior.

The function 'load_extern_system_code' in agt/agt.c is called to load the external system library. If found, the agt_system_init_profile_fn_t callback is invoked. This allows the external system code to modify the server profile settings.

Refer to the yp-system section of the YumaPro yp-system API Guide for more details.

Bootstrap CLI and Load Core YANG Modules

The 'ncx_init' function is called to set up core data structures.

This function also calls the 'bootstrap_cli' function in ncx/ncx.c, which processes some key configuration parameters that need to be set right away, such as the logging parameters and the module search path.

The following common parameters are supported:

After the search path and other key parameters are set, the server loads some core YANG modules, including netconfd-pro.yang, which has the YANG definitions for all the CLI and configuration parameters.

External System Init Phase I : Pre CLI

The system init1 callback is invoked with the 'pre_cli' parameter set to 'TRUE'.

This callback phase can be used to register external ACM or logging callbacks.

Refer to yp_system_init1 for details on this callback.

Load CLI Parameters

Any parameters entered at the command line have precedence over values set later in the configuration file(s).

The following parameters can only be entered from the command line:

The --config parameter specifies the configuration file to load.

The --no-config parameter specifies that the default configuration file /etc/yumapro/netconfd-pro.conf should be skipped.

The --help parameter causes help to be printed, and then the server will exit.

The --version parameter causes the version to be printed, and then the server will exit.

The command line parameters are loaded but not activated yet.

Load Main Configuration File

The configuration file specified by the --config parameter, or the default configuration file, is loaded.

The command line parameters are loaded but not activated yet. Any leaf parameters already entered at the command line are skipped.

The --confdir parameter specifies the location of a secondary configuration directory. This parameter can be set at the command line or the main configuration file.

The command line parameters are loaded but not activated yet.
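The precedence rule described above (a leaf already entered at the command line is skipped when it appears again in a configuration file) can be sketched as a small "add if unset" parameter table. The types and function names are hypothetical and do not reflect how the server actually stores parameters.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define DEMO_MAX_PARAMS 16

typedef struct demo_param {
    const char *name;
    const char *value;
} demo_param_t;

typedef struct demo_param_set {
    demo_param_t params[DEMO_MAX_PARAMS];
    size_t count;
} demo_param_set_t;

/* Look up a leaf parameter by name; returns NULL if it is not set. */
const char *demo_param_get(const demo_param_set_t *set, const char *name)
{
    size_t i;

    for (i = 0; i < set->count; i++) {
        if (strcmp(set->params[i].name, name) == 0) {
            return set->params[i].value;
        }
    }
    return NULL;
}

/*
 * Add a leaf parameter only if it is not already present.
 * Returns true if the value was stored, false if it was skipped
 * (already set by an earlier source, or the table is full).
 */
bool demo_param_add_if_unset(demo_param_set_t *set,
                             const char *name, const char *value)
{
    if (demo_param_get(set, name) != NULL ||
        set->count == DEMO_MAX_PARAMS) {
        return false;
    }
    set->params[set->count].name = name;
    set->params[set->count].value = value;
    set->count++;
    return true;
}
```

Loading the command line first and the configuration files afterwards through 'demo_param_add_if_unset' gives the command line values precedence without any explicit priority field.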

Load Secondary Configuration Files

The --confdir parameter specifies the directory to check for extra configuration files.

Warning

DO NOT SET THIS PARAMETER TO THE SAME DIRECTORY AS THE MAIN CONFIGURATION FILE.

The default value is /etc/yumapro/netconfd-pro.d. This directory is not created by default when the program is installed. It must be created manually with the “mkdir” command.

All files ending in “.conf” will be loaded from this directory, if found.

The command line parameters are loaded but not activated yet.

Configuration Parameter Activation

After all CLI and configuration parameter files have been loaded they are applied to the agt_profile_t Struct and possibly other parameters within the server.

If the --version or --help parameters are entered, the server will exit after this step.

There are a small number of parameters that can be changed at run-time. Load the yumaworks-server.yang module to change these parameters at run-time.

External System Init Phase I : Post CLI

The system init1 callback is invoked with the 'pre_cli' parameter set to 'FALSE'.

This callback phase can be used to register most system callbacks and other preparation steps.

Load SIL Libraries and Invoke Phase I Callbacks

The --module CLI parameter specifies a module name to use for a SIL or SIL-SA library for a single module.

The --bundle CLI parameter specifies a bundle name to use for SIL or SIL-SA library for a set of modules.

The --loadpath CLI parameter specifies a set of directories to search for YANG modules, to load SIL and SIL-SA libraries from. Only modules not already loaded with --module or --bundle parameters will be loaded, if duplicate modules are found.

The init1 callbacks are invoked at this point. The SIL or SIL-SA code usually loads the appropriate YANG modules and registers callback functions.

External System Init Phase II : Pre-Load

The system init2 callback is invoked with the 'pre_load' parameter set to 'TRUE'.

This callback phase can be used to register an external NACM group callback and other preparation steps.

Refer to yp_system_init2 for details on this callback.

Load <running> Configuration

The --startup parameter specifies the XML file containing the YANG configuration data to load into the <running> datastore.

The --no-startup parameter specifies that this step will be skipped.

The --no-nvstore parameter specifies that this step will be skipped.

The --factory-startup parameter will cause the server to use the --startup-factory-file parameter value, or the default factory-startup-cfg.xml, or an empty configuration if none of these files are found.

If none of these parameters is entered, the default location is checked.

If an external handler is configured by registering an 'agt_nvload_fn' callback, then this function will be called to get the startup XML file, instead of the --startup or default locations.

If --fileloc-fhs=true, then the default file is /var/lib/netconfd-pro/startup-cfg.xml. If false, then the default file is $HOME/.yumapro/startup-cfg.xml.

If the default file startup-cfg.xml is not found, then the file factory-startup-cfg.xml will be checked, unless the --startup-factory-file parameter is set.

If the --startup-prune-ok parameter is set to TRUE, then unknown data nodes will be pruned from the <running> configuration. Otherwise unknown data nodes will cause the server to terminate with an error.

If the --startup-error parameter is set to continue, then errors in the parsing of the configuration data do not cause the server to terminate, if possible.

If --startup-error is set to fallback, then the server will attempt to reboot with the factory default configuration.

If the --running-error parameter is set to continue, then errors in the root-check validation of the configuration data do not cause the server to terminate, if possible.
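The default startup file locations described above, selected by --fileloc-fhs, can be expressed as a small path-building helper. The function name and signature are hypothetical. Only the two path strings come from the text, and the helper does not model the --startup, --factory-startup, or external handler cases.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdio.h>

/*
 * Write the default startup file path into buf.
 * fileloc_fhs mirrors the --fileloc-fhs setting; home is the value
 * of $HOME and is only needed when fileloc_fhs is false.
 * Returns true on success, false if home is missing or buf is too small.
 */
bool demo_default_startup_path(bool fileloc_fhs, const char *home,
                               char *buf, size_t buflen)
{
    int n;

    if (fileloc_fhs) {
        n = snprintf(buf, buflen, "%s",
                     "/var/lib/netconfd-pro/startup-cfg.xml");
    } else {
        if (home == NULL) {
            return false;
        }
        n = snprintf(buf, buflen, "%s/.yumapro/startup-cfg.xml", home);
    }
    /* snprintf reports the untruncated length; reject truncation. */
    return n >= 0 && (size_t)n < buflen;
}
```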

Note

Some OpenConfig (and other) modules have top-level mandatory nodes. For these modules use the following parameters:

startup-error=continue

running-error=continue

Invoke SIL Phase II Callbacks

The phase II callbacks for SIL and SIL-SA libraries are invoked.

This callback usually checks the <running> datastore and activates any configuration found.

Operational data is initialized in this phase as well.

External System Init Phase II : Post-Load

The system init2 callback is invoked with the 'pre_load' parameter set to 'FALSE'.

This callback phase can be used to load data into the server.

Initialize SIL-SA Subsystems

At this point, the server is able to accept control sessions from subsystems such as DB-API and SIL-SA. The init1 and init2 callbacks will be invoked for SIL-SA libraries. The SIL-SA must register callbacks in the init1 callback so the main server knows to include the subsystem in edit transactions. Phase II initialization for SIL-SA libraries is limited to activating the startup configuration data.

Server Ready

At this point the server is ready to accept management sessions for the configured northbound protocols.

Server Operation

This section briefly describes the server internal behavior for some basic NETCONF operations.

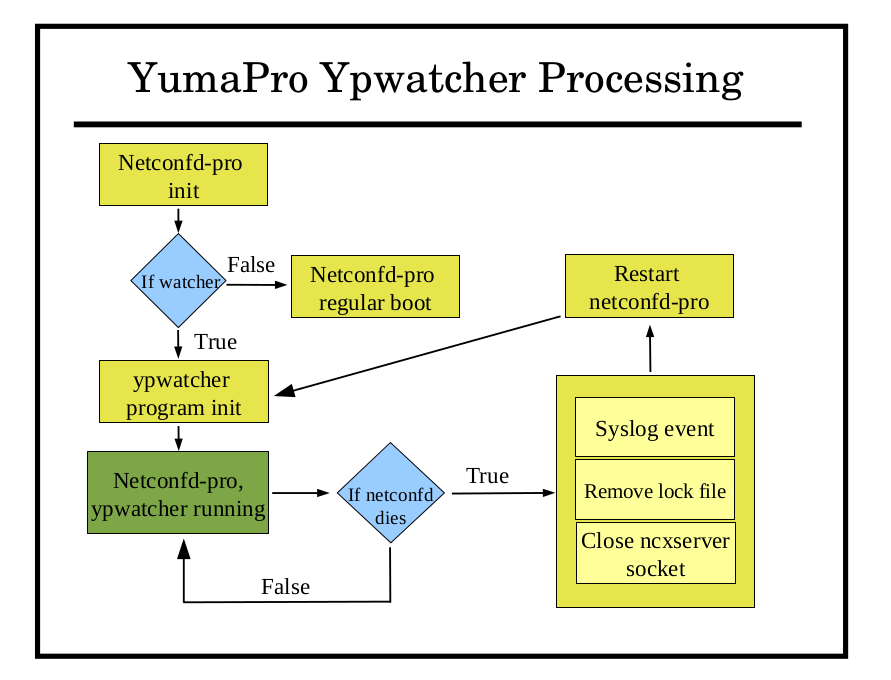

Ypwatcher processing

After the module definitions and configuration parameters are loaded successfully, the ypwatcher program is called to start the monitoring process.

The 'agt_cli_process_input' function processes the netconfd-pro input parameters. To process the ypwatcher-specific parameters, this function checks for the existence of the watcher parameters in the input.

The ypwatcher program will be called by the 'agt_init1' function by default, unless the --no-watcher parameter is specified or the program is already running.

It is often useful in debugging to turn off the ypwatcher so it does not interfere with intentional stoppage (e.g., stop at breakpoint).

To disable ypwatcher:

netconfd-pro --no-watcher

The ypwatcher program runs continuously and attempts to restart the server any time it exits unexpectedly.

An unexpected exit is a server shutdown caused by a severe error, such as a segmentation fault, core dump, bus error, or invalid memory reference. The ypwatcher program will restart the server only if one of these termination actions caused the server to shut down.
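As a rough illustration of the restart decision, the sketch below classifies a termination signal as a crash or not. The function name is hypothetical, and the choice of SIGSEGV and SIGBUS is an assumption based on the error types listed above. The exact set of conditions checked by ypwatcher is not specified here.

```c
#include <signal.h>
#include <stdbool.h>

/*
 * Return true if the signal that terminated the server should be
 * treated as an unexpected exit that warrants a restart.
 * Assumption: only SIGSEGV and SIGBUS are treated as crashes.
 */
bool demo_is_crash_signal(int signum)
{
    switch (signum) {
    case SIGSEGV:   /* segmentation fault, invalid memory reference */
    case SIGBUS:    /* bus error */
        return true;
    default:        /* e.g. SIGTERM from an intentional stop */
        return false;
    }
}
```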

Loading Modules and SIL Code

YANG modules and their associated device instrumentation can be loaded dynamically with the --module configuration parameter. Some examples are shown below:

module=foo

module=bar

module=baz@2009-01-05

module=~/mymodules/myfoo.yang

xmlChar *ncxmod_find_sil_file(const xmlChar *fname, boolean generrors, status_t *res)

Determine the location of the specified server instrumentation library file.

Parameters:

fname -- SIL file name with extension

generrors -- TRUE if error message should be generated; FALSE if no error message

res -- [out] address of status result; *res is set to the status

Returns:

Pointer to the malloced and initialized string containing the complete filespec, or NULL if not found. It must be freed after use.

The 'ncxmod_find_sil_file' function in ncx/ncxmod.c is used to find the library code associated with each module name. The following search sequence is followed:

Check the $YUMAPRO_HOME/target/lib directory

Check each directory in the $YUMAPRO_RUNPATH environment variable or --runpath configuration variable.

Check the /usr/lib/yumapro directory

If the module parameter contains any sub-directories or a file extension, then it is treated as a file, and the module search path will not be used. Instead the absolute or relative file specification will be used.

If the first term starts with an environment variable or the tilde (~) character, it will be expanded first.

If the 'at sign' (@) followed by a revision date is present, then that exact revision will be loaded.

If no file extension or directories are specified, then the module search path is checked for YANG and YIN files that match. The first match will be used, which may not be the newest, depending on the actual search path sequence.

The $YUMAPRO_MODPATH environment variable or --modpath configuration parameter can be used to configure one or more directory sub-trees to be searched.

The $YUMAPRO_HOME environment variable or --yumapro-home configuration parameter can be used to specify the YumaPro project tree to use if nothing is found in the current directory or the module search path.

The $YUMAPRO_INSTALL environment variable or default YumaPro install location (/usr/share/yumapro/modules) will be used as a last resort to find a YANG or YIN file.
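The rules above for interpreting a --module value can be sketched as a small classifier. The 'demo_' names and buffer sizes are hypothetical, and treating any '/' or '.' character as the sign of a sub-directory or file extension is a simplifying assumption, not the server's actual test. Environment variable and tilde expansion are not modeled.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

typedef struct demo_modspec {
    bool is_file;        /* true: treat the value as a file specification */
    char name[64];       /* module name (empty when is_file is true) */
    char revision[16];   /* revision date, or empty if none was given */
} demo_modspec_t;

/* Classify one --module value. Returns false if a field does not fit. */
bool demo_parse_module_param(const char *value, demo_modspec_t *out)
{
    const char *at;
    size_t name_len;

    memset(out, 0, sizeof(*out));

    /* Assumption: '/' or '.' marks a sub-directory or file extension. */
    if (strchr(value, '/') != NULL || strchr(value, '.') != NULL) {
        out->is_file = true;
        return true;
    }

    /* Otherwise split an optional "@revision" suffix from the name. */
    at = strchr(value, '@');
    name_len = (at != NULL) ? (size_t)(at - value) : strlen(value);
    if (name_len == 0 || name_len >= sizeof(out->name)) {
        return false;
    }
    memcpy(out->name, value, name_len);

    if (at != NULL) {
        size_t rev_len = strlen(at + 1);
        if (rev_len == 0 || rev_len >= sizeof(out->revision)) {
            return false;
        }
        memcpy(out->revision, at + 1, rev_len);
    }
    return true;
}
```

With the example values shown earlier, 'foo' yields a bare module name, 'baz@2009-01-05' yields a name plus an exact revision, and '~/mymodules/myfoo.yang' is classified as a file specification.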

The server processes --module parameters by first checking if a dynamic library can be found which has an 'soname' that matches the module name. If so, then the SIL phase 1 initialization function is called, and that function is expected to call the 'ncxmod_load_module' function.

If no SIL file can be found for the module, then the server will load the YANG module anyway, and support database operations for the module, for provisioning purposes. Any RPC operations defined in the module will also be accepted (depending on access control settings), but the action will not actually be performed. Only the input parameters will be checked, and <ok> or some <rpc-error> returned.

Core Module Initialization

The 'agt_init2' function in agt/agt.c is called after the configuration parameters have been collected.

Initialize the core server code modules

Static device-specific modules can be added to the agt_init2 function after the core modules have been initialized

Any 'module' parameters found in the CLI or server configuration file are processed.

The 'agt_cap_set_modules' function in agt/agt_cap.c is called to set the initial module capabilities for the ietf-netconf-monitoring.yang module.

Startup Configuration Processing

After the static and dynamic server modules are loaded, the --startup (or --no-startup) parameter is processed by 'agt_init2' in agt/agt.c:

If the --startup parameter is used and includes any sub-directories, it is treated as a file and must be found, as specified.

Otherwise, the $YUMAPRO_DATAPATH environment variable or --datapath configuration parameter can be used to determine where to find the startup configuration file.

If neither the --startup nor the --no-startup configuration parameter is present, then the data search path will be used to find the default startup-cfg.xml file.

The $YUMAPRO_HOME environment variable or --yumapro-home configuration parameter is checked if no file is found in the data search path. The $YUMAPRO_HOME/data directory is checked if this parameter is set.

The $YUMAPRO_INSTALL environment variable is checked next, if the startup configuration is still not found.

The default location if none is found is the $HOME/.yumapro/startup-cfg.xml file.

It is a fatal error if a startup config is specified and it cannot be found.

As the startup configuration is loaded, any SIL callbacks that have been registered will be invoked for the associated data present in the startup configuration file. The edit operation will be OP_EDITOP_LOAD during this callback.

After the startup configuration is loaded into the running configuration database, all the stage 2 initialization routines are called. These are needed for modules which add read-only data nodes to the tree containing the running configuration. SIL modules may also use their 'init2' function to create factory default configuration nodes (which can be saved for the next reboot).

Process an Incoming <rpc> Request

PARSE Phase

The incoming buffer is converted to a stream of XML nodes, using the xmlTextReader functions from libxml2.

The 'agt_val_parse' function is used to convert the stream of XML nodes to a val_value_t structure, representing the incoming request according to the YANG definition for the RPC operation.

An rpc_msg_t structure is also built for the request.

VALIDATE Phase

If a message is parsed correctly, then the incoming message is validated according to the YANG machine-readable constraints.

Any description statement constraints need to be checked with a callback function.

The 'agt_rpc_register_method' function in agt/agt_rpc.c is used to register callback functions.

INVOKE Phase

If the message is validated correctly, then the invoke callback is executed.

This is usually the only required callback function.

Without an invoke callback, the RPC operation will have no effect.

This callback will set fields in the rpc_msg_t header that will allow the server to construct or stream the <rpc-reply> message back to the client.

REPLY Phase

Unless some catastrophic error occurs, the server will generate an <rpc-reply> response.

If the session is dropped before this phase then no reply will be sent.

If any <rpc-error> elements are needed, they are generated first.

If there is any response data to send, that is generated or streamed (via callback function provided earlier) at this time.

Any unauthorized data (according to the access control configuration) will be silently dropped from the message payload.

If there were no errors and no data to send, then an <ok> response is generated.

POST_REPLY Phase

After the response has been sent, a rarely-used callback function can be invoked to clean up any memory allocation or perform other data-model related tasks.

For example, if the 'rpc_user1' or 'rpc_user2' pointers in the message header contain allocated memory then they need to be freed at this time.
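The VALIDATE, INVOKE, REPLY, and POST_REPLY phases described above can be outlined as a simple dispatcher. Everything named 'demo_' is hypothetical: the message struct is not the rpc_msg_t layout, the callback signature is not the one used by 'agt_rpc_register_method', and the reply is reduced to a single kind even though a real reply may carry both errors and data. The PARSE phase is assumed to have completed already.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical message type (not the rpc_msg_t layout). */
typedef struct demo_msg {
    void *user1;      /* SIL-owned pointer, released in post-reply */
    int error_count;  /* errors queued for the reply */
    bool has_data;    /* reply data was produced by invoke */
} demo_msg_t;

typedef int (*demo_rpc_cb)(demo_msg_t *msg);  /* 0 means no error */

typedef struct demo_rpc_cbset {
    demo_rpc_cb validate;    /* optional */
    demo_rpc_cb invoke;      /* normally present */
    demo_rpc_cb post_reply;  /* optional, rarely used */
} demo_rpc_cbset_t;

typedef enum {
    DEMO_REPLY_OK,     /* no errors and no data */
    DEMO_REPLY_DATA,   /* reply data to send */
    DEMO_REPLY_ERROR   /* at least one error recorded */
} demo_reply_t;

demo_reply_t demo_dispatch_rpc(const demo_rpc_cbset_t *cbs, demo_msg_t *msg)
{
    demo_reply_t reply;
    int res = 0;

    /* VALIDATE: only called if registered. */
    if (cbs->validate != NULL) {
        res = cbs->validate(msg);
    }
    /* INVOKE: only reached when validation succeeded. */
    if (res == 0 && cbs->invoke != NULL) {
        res = cbs->invoke(msg);
    }
    if (res != 0) {
        msg->error_count++;
    }

    /* REPLY: errors take priority, then data, then a plain ok. */
    if (msg->error_count > 0) {
        reply = DEMO_REPLY_ERROR;
    } else if (msg->has_data) {
        reply = DEMO_REPLY_DATA;
    } else {
        reply = DEMO_REPLY_OK;
    }

    /* POST_REPLY: gives the SIL code a chance to free its pointers. */
    if (cbs->post_reply != NULL) {
        (void)cbs->post_reply(msg);
    }
    return reply;
}

/* Example callbacks used to exercise the dispatcher. */
int demo_validate_fail(demo_msg_t *msg) { (void)msg; return 1; }
int demo_invoke_data(demo_msg_t *msg) { msg->has_data = true; return 0; }
int demo_post_reply(demo_msg_t *msg) { msg->user1 = NULL; return 0; }
```

Note how a callback set with no invoke function still produces an ok reply, which matches the behavior described for modules loaded without SIL code.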

Edit the Database

Validate Phase

The server will determine the edit operation and the actual nodes in the target database (candidate or running) that will be affected by the operation.

All of the machine-readable YANG statements which apply to the affected node(s) are tested against the incoming PDU and the target database.

If there are no errors, the server will search for a SIL Validate callback function for the affected node(s).

If the SIL code has registered a database callback function for the node or its local ancestors, it will be invoked.

This SIL callback function usually checks additional constraints that are contained in the YANG description statements for the database objects.

Apply Phase

If the Validate Phase completes without errors, then the requested changes are applied to the target database.

This phase is used for the internal data tree manipulation and validation only.

It is not used to alter device behavior.

Resources may need to be reserved during the SIL Apply callback, but the database changes are not activated at this time.

Commit Phase

If the Validate and Apply Phases complete without errors, then the server will search for SIL Commit callback functions for the affected node(s) in the target database.

This SIL Commit callback phase is used to apply the changes to the device and/or network.

It is only called when a commit procedure is attempted.

This can be due to a <commit> operation, or an <edit-config> or <copy-config> operation on the running database.
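As a rough illustration of how the work is divided between the three phases, the sketch below uses a single callback that switches on the phase. The names 'edit_cb_phase_t', 'device_model_t', and 'demo_edit_cb' are hypothetical, and the 0..100 range check is an invented stand-in for a constraint taken from a YANG description statement.

```c
/* Hypothetical phase identifiers; not the server's own enumeration. */
typedef enum { CB_VALIDATE, CB_APPLY, CB_COMMIT, CB_ROLLBACK } edit_cb_phase_t;

typedef struct {
    int reserved;   /* resource reserved during Apply */
    int active;     /* value activated on the device during Commit */
} device_model_t;

/* Returns 0 on success; a nonzero value aborts the transaction. */
int demo_edit_cb(edit_cb_phase_t phase, int new_value, device_model_t *dev)
{
    switch (phase) {
    case CB_VALIDATE:
        /* Extra constraint check only; no state is saved here. */
        return (new_value >= 0 && new_value <= 100) ? 0 : -1;
    case CB_APPLY:
        /* Reserve resources, but do not change device behavior yet. */
        dev->reserved = 1;
        return 0;
    case CB_COMMIT:
        /* Activate the change on the device. */
        dev->active = new_value;
        dev->reserved = 0;
        return 0;
    case CB_ROLLBACK:
        /* Release anything reserved during Apply. */
        dev->reserved = 0;
        return 0;
    }
    return -1;
}
```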

Rollback Phase

If any error occurs during Validate, Apply, or Commit, the transaction is aborted and the server restores the target datastore to its pre-transaction state. Behavior depends on where the failure occurred:

Error in Validate Phase: Rollback is a no-op because no edits have been applied yet, and no SIL Rollback callbacks are invoked. For this reason, a SIL Validate callback must not save any state that would need a later callback to clean it up. Such work belongs in the Apply or Commit Phase.

Error in Apply Phase: The server reverts changes made during Apply Phase and invokes SIL Rollback callbacks for affected nodes to release reserved resources.

Error in Commit Phase: The server performs Reverse Edits to restore the datastore and undo Commit Phase changes. Depending on the edit complexity, the server may (a) execute Reverse Edits for nodes that require logical undo and (b) invoke SIL Rollback callbacks for nodes that only need to reverse Apply Phase changes.

SIL Rollback callbacks are executed in reverse order to avoid dangling references.

During the Rollback Phase, the server searches for and invokes any registered SIL Rollback callback using the rollback operation. The SIL rollback handler is expected to release resources, stop services started during the failed commit, and return the platform to its prior state.

The same rollback procedure is used for confirmed commits (the :confirmed-commit capability) that time out or are explicitly canceled by the client. In these cases, the server restores the backup snapshot taken at the start of the confirmed-commit window.

Rollback is scoped to the transaction: only nodes affected by the failed edit set are reverted.
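The three failure cases can be summarized in a small planning function. This is one reading of the rules above, not the server's algorithm: the names 'fail_phase_t', 'rollback_plan_t', and 'plan_rollback' are hypothetical, 'affected' is taken to mean the nodes whose Apply callback has already run, and 'committed' the nodes whose Commit callback succeeded before the failure.

```c
/* Hypothetical model of the rollback decision; not the server API. */
typedef enum { FAIL_VALIDATE, FAIL_APPLY, FAIL_COMMIT } fail_phase_t;

typedef struct {
    int revert_datastore;     /* 1 if datastore changes must be undone */
    int sil_rollback_nodes;   /* nodes that get a SIL Rollback callback */
    int reverse_edit_nodes;   /* nodes that need a Reverse Edit */
} rollback_plan_t;

rollback_plan_t plan_rollback(fail_phase_t where, int affected, int committed)
{
    rollback_plan_t plan = { 0, 0, 0 };

    switch (where) {
    case FAIL_VALIDATE:
        /* Nothing was applied, so there is nothing to undo. */
        break;
    case FAIL_APPLY:
        plan.revert_datastore = 1;
        plan.sil_rollback_nodes = affected;
        break;
    case FAIL_COMMIT:
        plan.revert_datastore = 1;
        /* Committed nodes need a logical undo. */
        plan.reverse_edit_nodes = committed;
        /* The rest only need their Apply Phase work reversed. */
        plan.sil_rollback_nodes = affected - committed;
        break;
    }
    return plan;
}
```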

Reverse Edit

This is not an extra phase of the failed transaction.

The server performs a new internal edit transaction, called a Reverse Edit, when one or more edits were successfully applied and committed during the Commit Phase but a later callback in the same Commit Phase fails.

The server invokes the Validate -> Apply -> Commit callbacks for each Reverse Edit.

Each Reverse Edit operation is the inverse of the original operation (e.g., create -> delete, delete -> create, merge/replace -> restore previous value).

Reverse Edits are executed in the reverse order of the original edits.

If a Reverse Edit cannot be fully completed (e.g., a SIL Rollback callback fails), the server falls back to restoring the pre-commit backup snapshot and reports the error in the <rpc-reply> with appropriate error-info.
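The inversion and ordering rules can be sketched as follows. The names 'edit_op_t', 'inverse_op', and 'build_reverse_edits' are hypothetical, and 'OP_RESTORE' is a placeholder invented here for "restore previous value"; it is not a NETCONF edit operation.

```c
#include <stddef.h>

/* Hypothetical operation codes for illustration only. */
typedef enum {
    OP_CREATE,
    OP_DELETE,
    OP_MERGE,
    OP_REPLACE,
    OP_RESTORE    /* placeholder: put the previous value back */
} edit_op_t;

/* Map an original edit operation to the operation that undoes it. */
edit_op_t inverse_op(edit_op_t op)
{
    switch (op) {
    case OP_CREATE:
        return OP_DELETE;
    case OP_DELETE:
        return OP_CREATE;
    case OP_MERGE:
    case OP_REPLACE:
    case OP_RESTORE:
        return OP_RESTORE;
    }
    return OP_RESTORE;
}

/* Fill out[0..n-1] with the inverses of in[0..n-1], last edit first. */
void build_reverse_edits(const edit_op_t *in, size_t n, edit_op_t *out)
{
    for (size_t i = 0; i < n; i++) {
        out[i] = inverse_op(in[n - 1 - i]);
    }
}
```

Undoing the most recent edit first means that a node created late in the transaction is removed before the earlier nodes it may depend on.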

Save the Database

The following bullets describe how the server saves configuration changes to non-volatile storage:

If the --with-startup=true parameter is used, then the server will support the :startup capability. In this case, the <copy-config> command needs to be used to cause the running configuration to be saved.

If the --with-startup=false parameter is used, then the server will not support the :startup capability. In this case, the database will be saved each time the running configuration is changed.

The <copy-config> or <commit> operations will cause the startup configuration file to be saved, even if nothing has changed. This allows an operator to replace a corrupted or missing startup configuration file at any time.

The database is saved with the 'agt_ncx_cfg_save' function in agt/agt_ncx.c.

The 'with-defaults' 'explicit' mode is used during the save operation to filter the database contents.

Any values that have been set by the client will be saved in NV-storage.

Any value set by the server to a YANG default value will not be saved in the database.

If the server creates a node that does not have a YANG default value (e.g., containers, lists, keys), then this node will be saved in NV-storage.

If the --startup parameter is used, then the server will save the database by overwriting that file. The file will be renamed to backup-cfg.xml first.

If the --no-startup parameter is used, or no startup file is specified and no default is found, then the server will create a file called 'startup-cfg.xml', in the following manner:

If the $YUMAPRO_HOME variable is set, the configuration will be saved in $YUMAPRO_HOME/data/startup-cfg.xml.

Otherwise, the configuration will be saved in $HOME/.yumapro/startup-cfg.xml.
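Two of the rules in this list lend themselves to a short sketch: which nodes are written in 'explicit' mode, and where the default startup file is placed. The names 'node_model_t', 'saved_to_nv', and 'default_startup_path' are hypothetical. Two behaviors are assumptions of the sketch rather than statements from the text: a server-set value that differs from the YANG default is saved, and an empty environment variable is treated as unset.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdio.h>

/* Hypothetical per-node flags; not the server's value structure. */
typedef struct {
    bool set_by_client;      /* value was set by a client edit */
    bool has_yang_default;   /* the YANG object defines a default */
    bool is_default_value;   /* current value equals that default */
} node_model_t;

/* 'explicit' mode filter: should this node be written to NV-storage? */
bool saved_to_nv(const node_model_t *node)
{
    if (node->set_by_client) {
        return true;
    }
    /* Server-created node holding its YANG default is skipped. */
    if (node->has_yang_default && node->is_default_value) {
        return false;
    }
    return true;
}

/* Build the default startup-cfg.xml path; returns 0 on success. */
int default_startup_path(const char *yumapro_home, const char *home,
                         char *buf, size_t buflen)
{
    int n;

    if (yumapro_home != NULL && *yumapro_home != '\0') {
        n = snprintf(buf, buflen, "%s/data/startup-cfg.xml", yumapro_home);
    } else if (home != NULL && *home != '\0') {
        n = snprintf(buf, buflen, "%s/.yumapro/startup-cfg.xml", home);
    } else {
        return -1;   /* neither variable is usable */
    }
    /* Fail on an encoding error or a truncated path. */
    return (n < 0 || (size_t)n >= buflen) ? -1 : 0;
}
```

A caller would typically pass the results of getenv("YUMAPRO_HOME") and getenv("HOME") as the first two arguments.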

The database is saved as an XML instance document, using the <config> element in the NETCONF 'base' namespace as the root element. Each top-level YANG module supported by the server, which contains some explicit configuration data, will be saved as a child node of the <nc:config> element. There is no particular order to the top-level data model elements.

Server YANG Modules

Refer to the YumaPro YANG Reference document for details on the server YANG modules.

Built-in Server Modules

There are several YANG modules which are implemented within the server, and not loaded at run-time like a dynamic SIL module. Some of them are IETF standard modules and some are YumaPro extension modules.

The following IETF YANG modules are implemented by the server and cannot be replaced or altered in any way. Deviations and annotations are not supported for these modules either.

Note that this does not include the ietf-interfaces.yang module. To replace this module:

If the server is built from sources then do not use the EVERYTHING=1 or WITH_IETF_INTERFACES=1 make flags.

If a binary package is used then delete the SIL library for this YANG module, usually named /usr/lib/yumapro/libietf-interfaces.so.

Create new SIL or SIL-SA code for the replacement library. Install and enable it as described in the Loading YANG Modules section.

Make sure to also load a version of the 'iana-if-type' module.

No 'netconfcentral' or 'yumaworks' modules may be modified or replaced.

Optional Server Modules

There are YANG modules which are implemented outside the server in order to improve modularity and provide a choice of modules that can supplement the server with a new feature at start up or at run time. Some of them are NETCONF standard modules and some are YumaPro extension modules.